Release Management in Multi-Cloud Environments: Navigating Complexity for Startup Success

When you are building a startup, selecting the right cloud provider can take time. "All workloads need more options, and some may only be met by a specific alternative." Fortunately, you are not limited to a single cloud platform.

The multi-cloud paradigm combines several cloud computing environments, which sets it apart from hybrid IT. It is steadily growing in popularity. However, multi-cloud setups are inherently complex and therefore challenging to manage, so there are important factors to consider before you deploy to multiple clouds.

When businesses need different cloud services, some choose to use several providers. This is called a multi-cloud strategy, and it helps reduce the risk of problems if one provider has an issue. A multi-cloud strategy can also save time and effort and help address security concerns.

Managing multi-cloud environments requires considering security, connectivity, performance, and service variations.

The Significance of Release Management#

A release management system sets the expectations that keep the software development process on track. Software release processes vary by sector and requirements, so you can best achieve your goals by creating a tailored, well-organized plan.

Before scheduling a release, you need to test that the software can perform the tasks it is meant to. Release management in a multi-cloud environment can be challenging because of the number of providers, services, tools, and settings involved, all of which complicate the process.

Challenges of Multi-Cloud Release Management#

Now, let's discuss some difficulties associated with multi-cloud adoption. Firstly, each cloud service provider has different rules for deploying and managing apps. If you use several cloud providers, your cloud operations strategy will be a mixture of all of them. These are the primary difficulties in managing workloads across cloud service providers:

Compatibility#

Every cloud platform has its own integration procedures and compatibility requirements, which makes connecting cloud services and apps across platforms a challenging task. Companies must invest in integration solutions to work efficiently across multiple cloud platforms. Standardized integration approaches can improve the interoperability, flexibility, and scalability of multi-cloud environments.

Security#

Cloud security requires shared responsibility. You must take appropriate measures to protect your data, even with native tools available. Cloud service providers offer native security posture management, which includes cost management tools. However, these tools only provide security ratings for workloads on their respective platforms.

Ensuring cloud safety therefore means navigating several tools and dashboards. Each one gives you a view into an individual silo, but what you need is a single picture of the security posture across all your cloud installations. That unified perspective makes it easier to rank vulnerabilities and find ways to mitigate them.
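As a rough illustration of that unified view, the sketch below merges findings from several providers into one ranked list. It assumes each provider's findings have already been exported and normalized to a common 1 to 10 severity scale; the `Finding` fields and provider labels are hypothetical and do not correspond to any vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    provider: str   # illustrative label such as "aws" or "azure"
    resource: str   # identifier of the affected resource
    severity: int   # assumed normalized scale: 1 (low) to 10 (critical)
    title: str


def rank_findings(*per_provider: list[Finding]) -> list[Finding]:
    """Merge per-provider findings and sort them, most severe first."""
    merged = [finding for findings in per_provider for finding in findings]
    # Ties on severity are broken by provider and resource for a stable order.
    return sorted(merged, key=lambda f: (-f.severity, f.provider, f.resource))
```

In practice, the normalization step is the hard part, because each provider scores risk differently.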

Risk of Vendor Lock-in#

Companies often choose multi-cloud to avoid lock-in by spreading work across several providers. To manage these environments without reintroducing the risk of vendor lock-in, plan ahead.

To avoid vendor lock-in, use open standards and container technologies such as Kubernetes. They give you application and infrastructure portability across cloud platforms and help you remove dependencies on any specific cloud provider.

Cost Optimization#

A multi-cloud approach leads to an explosion of resources, and unused cloud resources eat into your capital investment. Track your inventory to avoid such scenarios.

Every cloud service has built-in tools for cost optimization in cloud architecture. Yet, in a multi-cloud setting, it is vital to centralize your cloud inventory. This enables enterprise-wide insight into cloud usage.

You may need to use an external tool designed for this purpose. Keep in mind that optimizing costs after the fact rarely works out well. Instead, be proactive and track the resources that incur extra cost.
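To make the idea of a centralized inventory concrete, here is a minimal sketch. It assumes you have already exported resource data from each provider into one list; the `Resource` fields and the 5% utilization threshold are illustrative assumptions, not values from any provider's tooling.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Resource:
    provider: str
    name: str
    monthly_cost: float     # in whatever currency your exports use
    avg_utilization: float  # assumed range: 0.0 (idle) to 1.0 (fully used)


def cost_by_provider(inventory: list[Resource]) -> dict[str, float]:
    """Sum monthly cost per provider for an enterprise-wide view."""
    totals: dict[str, float] = defaultdict(float)
    for resource in inventory:
        totals[resource.provider] += resource.monthly_cost
    return dict(totals)


def idle_resources(inventory: list[Resource], threshold: float = 0.05) -> list[Resource]:
    """Return resources whose utilization is below the (assumed) threshold."""
    return [r for r in inventory if r.avg_utilization < threshold]
```

Running a report like this on a schedule is one way to track extra-cost resources proactively rather than after the bill arrives.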

Strategies for Effective Release Management#

Now, we'll look at the most effective ways to manage a multi-cloud infrastructure.

Manage Your Cloud Dependencies#

Dependencies and connections across cloud services and platforms can be challenging to manage, particularly in a hybrid or multi-cloud setup. Ensure your application is compatible with the cloud resources and APIs it requires.

To lessen your dependence on any one cloud, use abstraction layers or cloud-native tools. You should also put robust security measures and service discovery in place.
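One way to picture an abstraction layer is a small interface that release code depends on, with one adapter per provider behind it. The sketch below is an assumption-laden illustration: `ObjectStore`, `InMemoryStore`, and `publish_artifact` are hypothetical names, and the in-memory class merely stands in for adapters that would wrap each provider's real SDK.

```python
from typing import Protocol


class ObjectStore(Protocol):
    """Provider-neutral storage interface (hypothetical)."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in adapter; a real one would call a provider SDK instead."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def publish_artifact(stores: dict[str, ObjectStore], key: str, data: bytes) -> list[str]:
    """Upload the same release artifact to every configured store."""
    published = []
    for name, store in stores.items():
        store.put(key, data)
        published.append(name)
    return published
```

Because `publish_artifact` only knows about the interface, swapping or adding a provider means writing a new adapter rather than changing release logic.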

Multi-Cloud Architecture#

Cloud provider outages can cause application maintenance and service accessibility issues. To avoid such problems, design your applications to be fault-tolerant and highly available. Use multiple availability zones or regions within each provider.

This helps you build a resilient multi-cloud infrastructure.

Spreading workloads across multiple cloud providers adds further redundancy and reduces the chance of a single point of failure.
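The failover idea can be sketched as an ordered list of endpoints and a health check. The endpoint names in the usage note and the `is_healthy` callable are assumptions for illustration; a real check would probe your service over the network.

```python
from typing import Callable, Sequence


def first_healthy(endpoints: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first endpoint that passes the health check, in priority order."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    # Every region and provider failed its check; surface that loudly.
    raise RuntimeError("no healthy endpoint available")
```

For example, with a priority list such as `["aws-us-east", "aws-us-west", "gcp-europe"]` (hypothetical names), traffic falls through to another region first and to another provider only if the whole first provider is down.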

Release Policy#

You can also divide your workloads across cloud environments, since multiple providers give you a higher level of resiliency. As with change management, release management can only function well with a policy.

This is not an excuse to go all out and wrap everything in red tape. Rather, it is a chance for you to state what is required for the process to operate.
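A lightweight policy can even be expressed as code so that it is checked the same way for every cloud. The rules below (passing tests, a minimum number of approvers, a rollback plan) are hypothetical examples of what such a policy might require, not a prescribed standard.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseRequest:
    version: str
    tests_passed: bool
    approvers: list[str] = field(default_factory=list)
    rollback_plan: str = ""


def policy_violations(request: ReleaseRequest, min_approvers: int = 1) -> list[str]:
    """Return the reasons a release may not proceed; an empty list means it may."""
    violations = []
    if not request.tests_passed:
        violations.append("tests have not passed")
    if len(request.approvers) < min_approvers:
        violations.append(f"needs at least {min_approvers} approver(s)")
    if not request.rollback_plan.strip():
        violations.append("rollback plan is missing")
    return violations
```

Keeping the rule list this short is deliberate: it states what the process needs without adding red tape.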

Shared Security#

Under the shared security model, you are responsible for certain parts of cloud security, while your provider handles the other components.

The location of this dividing line may change from one cloud provider to another. You cannot assume that all cloud platforms provide the same level of protection for your data.

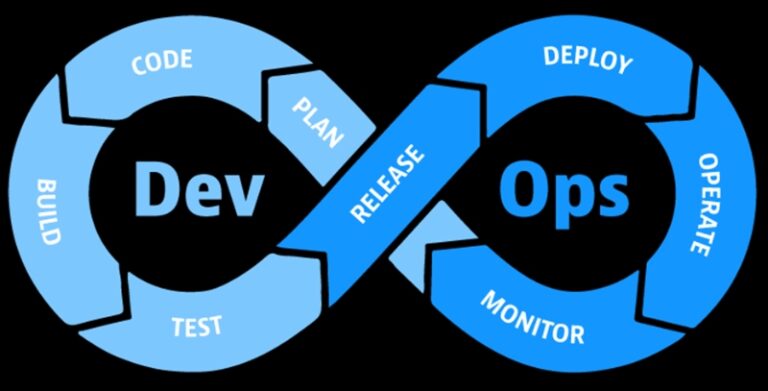

Agile Methodology#

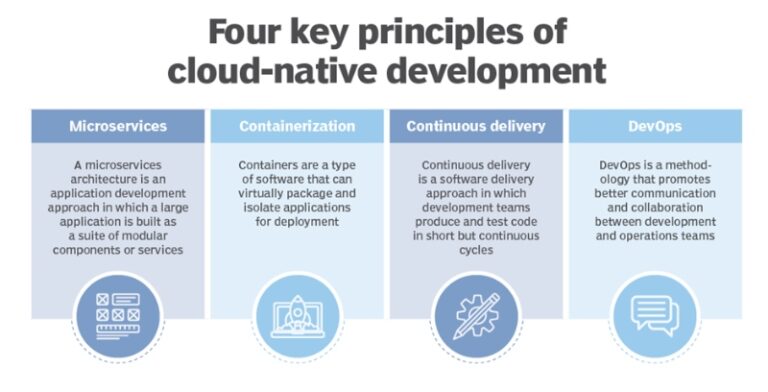

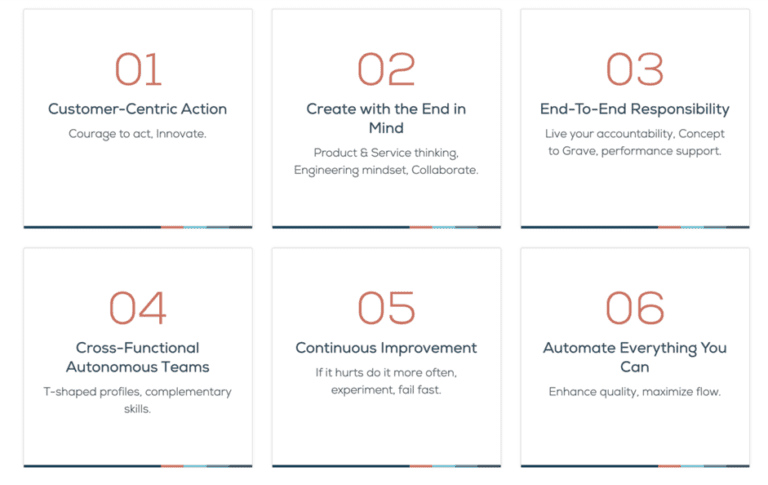

When managing multiple clouds, you should incorporate DevOps and Agile methodologies. The DevOps approach prioritizes automation, continuous integration, and continuous delivery. This allows for faster development cycles and more efficient operations.

Meanwhile, Agile techniques promote collaboration, adaptability, and iterative development. With them, your team can respond quickly to changing needs.

Choosing the Right Cloud Providers#

Finding the right partners/cloud providers for implementing a multi-cloud environment is essential. The success of your multi-cloud environment depends upon the providers you choose. Put time and effort into this step for a successful multi-cloud strategy deployment. Choose a cloud partner that has already implemented multi-cloud management.

Discuss every aspect before you start working with a cloud provider, including resource needs, scalability options, ease of data migration, and more.

Product Offering and Capabilities#

Every cloud provider has services that stand out and services that are merely passable, and each provider has different strengths for different products. Do your research to find the best cloud service provider for your needs.

Multi-cloud lets you adjust resource allocation in response to varying demand. Select a service provider that offers flexible plans so you can scale up or down as needed. AWS and Azure are broadly comparable as full-fledged cloud providers in terms of features and services. However, one provider's service may be preferable to another's for particular workloads.

You may have SQL Server-based apps within your enterprise. These apps are well suited to integration with an intelligent cloud and database. As a result, if you need to run them in the cloud, Azure SQL may be your best choice.

If you wish to use IBM Watson, you may only be able to do so through IBM's cloud. Google Cloud may be the best choice if your business uses Google services.

Ecosystem and Integrations#

Verify that the provider has a wide range of integrations with the software and services you rely on. You can check this against the apps or programs your company has already deployed. Good integrations simplify your team's interactions with the chosen vendor. You should also check that there are no functionality gaps, which is why working with a cloud provider that also offers consulting services is preferable.

Transparency#

For practical data preservation, consider data criticality, source transparency, and scheduling. Backup, restoration, and integrity checks serve as additional security measures. Clear communication of expected outcomes and parameters is crucial for cloud investment success. Organizations can also get risk insurance for recovery expenses beyond the provider's standard coverage.

Cost#

Most companies switch to the cloud because it is more cost-effective. The price you pay for the products and services that different clouds offer may vary. When choosing a provider, the bottom line is always front and center.

You should also think about the total cost of ownership, which includes the price of resources and support. Also consider any additional services you may need when selecting a cloud service provider.

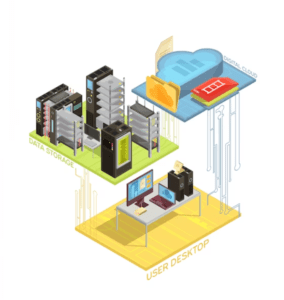

Tools and Technologies for Multi-Cloud Release Management#

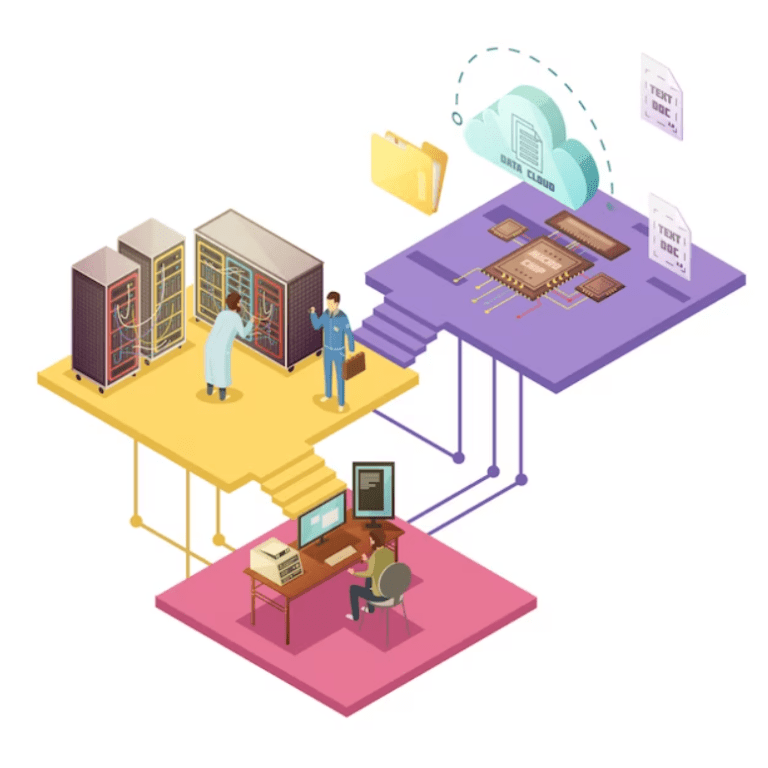

A multi-cloud management solution offers a single platform for monitoring, protecting, and optimizing several cloud deployments. There are many cloud management solutions on the market. Many of them are excellent choices for managing a single cloud, but there are also cross-cloud management platforms. Choose one that fits your current needs.

These platforms can increase cross-cloud visibility and cut the number of separate optimization tools you need. This reduces the manual effort of tracking and optimizing your multi-cloud deployment.

Containerization#

Release management across multiple clouds relies on container technologies like Docker. Containers package apps together with the dependencies they need to run, which helps keep behavior consistent across a wide range of cloud environments. This uniformity reduces compatibility difficulties and streamlines the deployment process, making containers an essential tool for multi-cloud implementations.

Orchestration#

Orchestration solutions are particularly effective when managing containerized applications spanning several clouds. They ensure that applications function in complex, multi-cloud deployments. Orchestration tools like Kubernetes provide automated scaling, load balancing, and failover.
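To give a feel for what automated scaling computes, the sketch below follows the core formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). It is a simplification that leaves out details such as the autoscaler's tolerance band and stabilization behavior, and the `min_replicas` and `max_replicas` defaults here are arbitrary assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to how far the metric is from target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp to the configured bounds, as an autoscaler would.
    return max(min_replicas, min(max_replicas, raw))
```

For instance, three replicas averaging 90% CPU against a 60% target would scale to five.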

Infrastructure as Code (IaC)#

IaC technologies let you provision and control infrastructure through code. IaC maintains consistency and lowers the risk of errors caused by manual intervention. This makes it easier to replicate infrastructure configurations across multiple cloud providers.
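The core idea, one declarative spec rendered into per-provider configuration, can be sketched in a few lines. This is not how any particular IaC tool works internally; `ServerSpec`, `render`, and the size mapping are hypothetical, and the instance type names are only illustrative choices that you should verify against each provider's documentation.

```python
from dataclasses import dataclass

# Assumed mapping from a provider-neutral size to a provider instance type.
SIZE_MAP = {
    "aws": {"medium": "t3.medium"},
    "azure": {"medium": "Standard_B2s"},
    "gcp": {"medium": "e2-medium"},
}


@dataclass(frozen=True)
class ServerSpec:
    name: str
    size: str
    count: int


def render(spec: ServerSpec, provider: str) -> dict:
    """Turn one provider-neutral spec into a provider-specific config dict."""
    try:
        instance_type = SIZE_MAP[provider][spec.size]
    except KeyError as exc:
        raise ValueError(f"no mapping for provider={provider!r}, size={spec.size!r}") from exc
    return {
        "provider": provider,
        "name": spec.name,
        "instance_type": instance_type,
        "count": spec.count,
    }
```

Because the spec lives in version control, the same definition can be reviewed once and applied to every cloud.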

Continuous Integration/Continuous Deployment (CI/CD)#

Continuous integration and continuous deployment pipelines automate the fundamental parts of the release process, including testing, integration, and deployment. This enables companies to run a consistent release pipeline across several clouds, which in turn encourages software delivery that is both dependable and quick. Tools such as Jenkins and GitLab CI are common choices.
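A consistent pipeline means every cloud goes through the same stages in the same order. The sketch below models that idea in plain Python rather than in Jenkins or GitLab CI syntax; the stage names and target labels are hypothetical, and each stage is just a callable that reports success or failure.

```python
from typing import Callable

Stage = Callable[[str], bool]  # takes a target name, returns True on success


def run_pipeline(targets: list[str], stages: dict[str, Stage]) -> dict[str, str]:
    """Run identical stages against each target, stopping a target at its first failure."""
    results: dict[str, str] = {}
    for target in targets:
        results[target] = "succeeded"
        for name, stage in stages.items():  # dicts preserve insertion order
            if not stage(target):
                results[target] = f"failed at {name}"
                break
    return results
```

A failure on one cloud does not block the others here; whether that is the right behavior depends on your release policy.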

Configuration Management#

Tools such as Puppet and Chef let you apply configuration changes across multiple cloud environments. This keeps server configurations and application deployments consistent, lowers the risk of configuration drift, and makes the system easier to manage.
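Configuration drift is simply the difference between the configuration you declared and the one actually running. The following sketch compares two flat dictionaries; it is a conceptual illustration, not how Puppet or Chef detect drift, and the setting names in the usage note are made up.

```python
def detect_drift(desired: dict, actual: dict) -> dict:
    """Map each differing key to a (desired, actual) pair.

    A key missing on one side is reported with None for that side, so a
    setting whose value is literally None cannot be told apart from a
    missing one in this simplified version.
    """
    drift = {}
    for key in sorted(desired.keys() | actual.keys()):
        if desired.get(key) != actual.get(key):
            drift[key] = (desired.get(key), actual.get(key))
    return drift
```

Comparing a desired `{"port": 443}` with an actual `{"port": 8443}` reports the `port` key along with both values, which is the kind of signal you would want per cloud environment.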

Security and Compliance Considerations#

Security and compliance are of the utmost importance in multi-cloud release management. To protect the integrity of your data and comply with regulations, focus on the following:

- Data Integrity: To avoid tampering, encrypt data both in transit and at rest. Keep backups and verify that the data they contain is intact.

- Regulatory Adherence: This includes identifying applicable regulations and automating compliance procedures. Regular auditing is also necessary to stay compliant.

- Access Control: Ensure only authorized workers can interact with sensitive data. Establish a solid identity and access management (IAM) system to govern user access, authentication, and authorization.
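As one small example of the data integrity point above, you can record a checksum when a backup is taken and compare it after restoring. The sketch uses SHA-256 from Python's standard library; the function names are hypothetical, and a checksum only detects changes, it does not replace encryption.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_backup(recorded_digest: str, restored: bytes) -> bool:
    """Check restored data against the digest recorded at backup time."""
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(recorded_digest, sha256_hex(restored))
```

Store the recorded digest separately from the backup itself, so that tampering with one does not silently cover for the other.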

By addressing these essential components, businesses can manage multi-cloud systems while keeping data secure. Following compliance standards also lowers the risks associated with data breaches and regulatory fines.

Future Trends in Multi-Cloud Release Management#

Rapidly growing demand and ongoing development have produced significant trends in recent years. These trends will push the integration of multi-cloud environments faster than ever. Let's explore the top trends that will shape the future.

Edge Computing#

Edge computing is one of the most influential innovations in multi-cloud architecture. It extends computing from the central hub out to the edge of telecommunications and other service provider networks, and from those networks on to user locations and sensor networks.

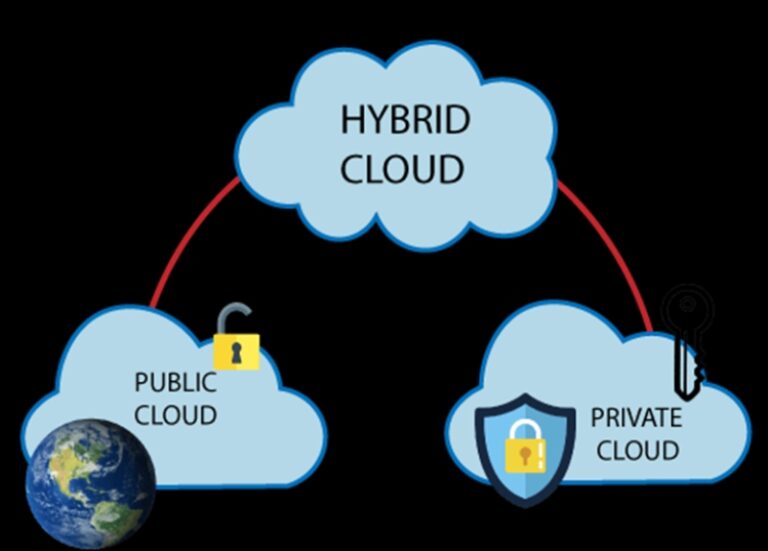

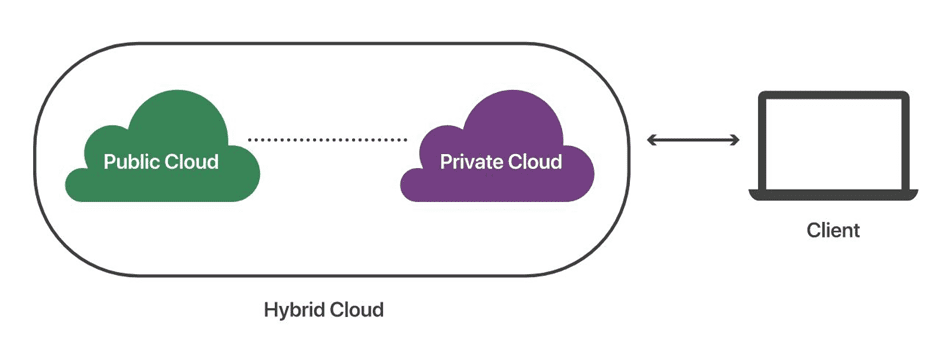

Hybrid Cloud Computing#

Most companies worldwide are beginning to use hybrid cloud computing systems to improve the efficiency of their workflows and production.

According to the data, almost all businesses will switch to multi-cloud by the end of 2023, because it is an optimal solution for increased speed, control, and safety.

Using Containers for Faster Deployment#

Using containers to speed up app deployment is one of the top multi-cloud trends. Container technologies let you speed up the build, packaging, and deployment processes.

With containers, developers can focus on the application's logic and dependencies, because each container offers a self-contained environment.

Meanwhile, the operations team can focus on delivering and managing applications without having to worry about platform versions or settings.

Conclusion#

Multi-cloud deployment requires an enterprise perspective and a planned infrastructure strategy. Outsourcing multi-cloud management to third-party providers can help keep operations running smoothly. Innovative multi-cloud strategies integrate several public cloud providers. Ultimately, each company needs to figure out which IT and cloud strategies will work best for it.