Why NGINX in Docker Can Be Difficult to Use and How to Solve Typical Problems Like an Expert

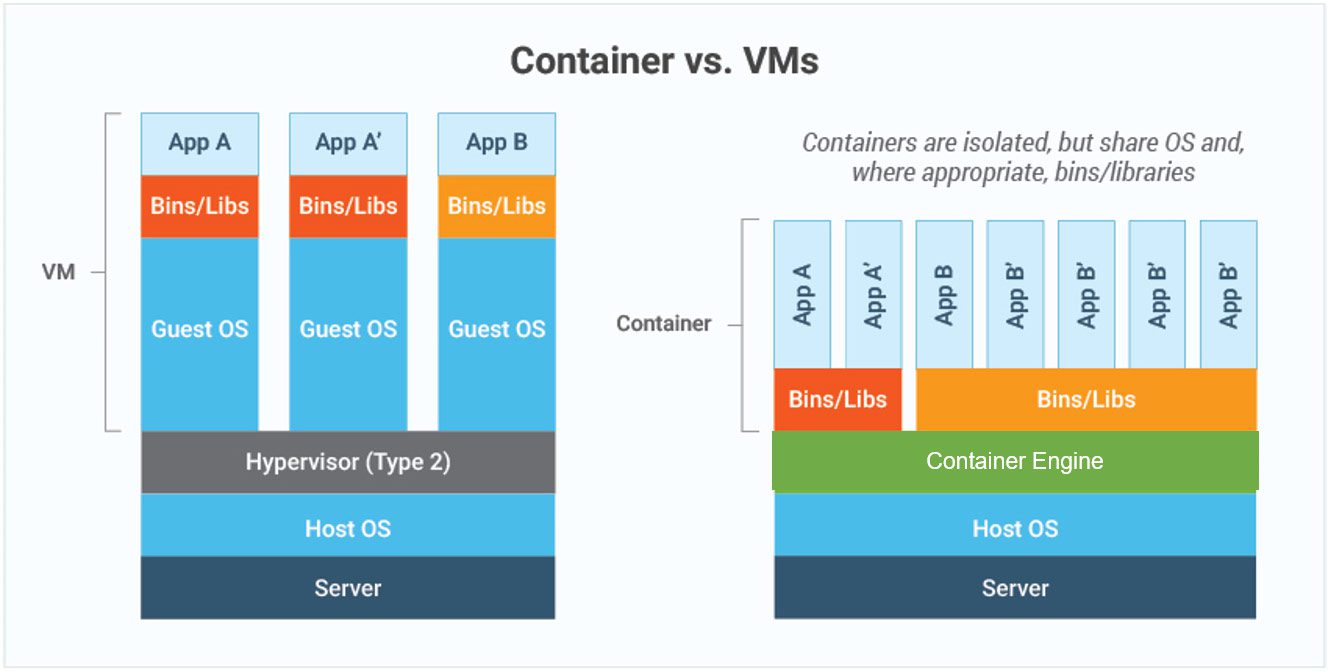

Before we dive into troubleshooting, let's talk about why you'd even want to run NGINX in a Docker container. The main reasons are portability, scalability, and isolation.

Portability#

Docker lets you package NGINX (or any other service) into a container that runs the same way on your laptop, in staging, or in production.

Scalability#

NGINX in Docker fits well with a microservices architecture. To handle varying traffic loads, you can run multiple NGINX instances, load balance across containers, and scale your application as demand grows.

Isolation#

Running NGINX in a container isolates it from the rest of your system, which reduces the likelihood of conflicts with other programs or settings. It also simplifies deployments.

But with all that flexibility comes a bit of complexity, especially when things go wrong. So, let's get into how to diagnose and fix those issues.

Common Issues You Might Face With NGINX in Docker#

1. NGINX Fails to Start - "host not found in upstream"#

This error pops up when NGINX can't resolve the hostname of a service you're proxying to. NGINX resolves upstream hostnames when it loads its configuration, so a name that can't be resolved stops it from starting at all. In a Dockerized setup, this usually means Docker's internal DNS can't resolve the container name.

Why does this happen?#

Docker uses a virtual network to connect containers, and when NGINX is trying to reach a service (e.g., nginx-exporter for metrics), it may fail to resolve that name to an IP address. This could be because the container hosting the service isn't on the same network or hasn't started yet.

How to fix it:#

- Check the network configuration: Make sure the upstream service and NGINX are attached to the same Docker network.

- Use service names: Instead of hardcoding IP addresses, refer to other containers by their service names, as defined in your `docker-compose.yml` file.

- Wait for dependencies: Make sure NGINX waits for the services it depends on to be operational. Docker Compose's `depends_on` lets you specify the start-up order; keep in mind that its short form only controls the order in which containers start, not whether the service inside is ready.
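Start order alone doesn't guarantee that an upstream is actually accepting connections. As a rough sketch, a small wait loop like the one below could run before NGINX starts, for example from a custom entrypoint. It is written in Python purely for illustration; the function name `wait_for_upstream`, the timeout values, and the idea of wrapping the entrypoint are assumptions, not something the official NGINX image provides.

```python
import socket
import time


def wait_for_upstream(host: str, port: int, timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Return True once `host` resolves and accepts a TCP connection.

    Returns False if that has not happened within `timeout` seconds.
    (Hypothetical helper for illustration.)
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection() resolves the name and opens a TCP connection,
            # so it covers both the DNS lookup and the "is it listening" check.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # OSError covers DNS failures (socket.gaierror) as well as
            # refused or timed-out connections.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Calling `wait_for_upstream("nginx-exporter", 9113)` would block until that service name resolves on the shared Docker network and the port accepts connections. The port 9113 is an assumption here, so substitute whatever your upstream listens on.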

2. Access Denied to NGINX Status or Metrics#

You may not be able to access the NGINX stub status or metrics endpoint from outside the container if you have enabled it (e.g., /stub_status or /metrics). Misconfigured network settings or access control settings are frequently to blame for this.

Why does this happen?#

Docker containers are isolated environments. If NGINX is set up to let only specific IPs access the status endpoints, requests arriving from a different IP (such as a reverse proxy or monitoring tool) are rejected with "403 Forbidden". A "connection refused" error usually points to a network problem instead, such as a port that isn't published or a service that isn't listening.

How to fix it:#

- Modify the `allow`/`deny` directives: Check that the right IPs are permitted to access those endpoints in your NGINX configuration. For instance, the `allow` directive must include the IP address of the machine or network you are monitoring from.

- Check forwarded headers: If NGINX sits behind another reverse proxy, such as Traefik or HAProxy, verify that the proxy passes the appropriate headers so the real client IP is visible.
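To see why a request ends up with a 403, it can help to model how the rules are evaluated. NGINX checks `allow` and `deny` rules in order and stops at the first match. The following Python sketch mimics that behaviour for illustration only; `is_allowed` and the example addresses are hypothetical, and the `172.16.0.0/12` range is an assumption about where your Docker bridge networks live (you can check yours with `docker network inspect`).

```python
import ipaddress


def is_allowed(client_ip, rules):
    """Evaluate ordered (action, target) rules; the first matching rule wins.

    `action` is "allow" or "deny"; `target` is an IP, a CIDR range, or "all".
    (Hypothetical helper that imitates NGINX's access rules.)
    """
    addr = ipaddress.ip_address(client_ip)
    for action, target in rules:
        if target == "all" or addr in ipaddress.ip_network(target, strict=False):
            return action == "allow"
    # No rule matched: the access rules place no restriction on the request.
    return True


# Only localhost is allowed, so a scraper in another container is rejected.
STRICT_RULES = [("allow", "127.0.0.1"), ("deny", "all")]

# Assumed fix: also allow the private range used by the Docker networks.
DOCKER_RULES = [("allow", "127.0.0.1"), ("allow", "172.16.0.0/12"), ("deny", "all")]
```

With `STRICT_RULES`, a request from `172.18.0.5` (an example container address) is denied, while `DOCKER_RULES` lets it through and still blocks outside addresses.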

3. Configuration Errors: Inaccurate or Missing Settings#

When things go awry, misconfigured NGINX settings are frequently to blame. Wrong file paths, missing environment variables, or incorrect settings in the `nginx.conf` file can all cause unexpected failures.

Why does this happen?#

Docker containers are typically configured through environment variables, mounted files, or Docker volumes. If these are mapped or passed incorrectly, NGINX may fail to start or behave unexpectedly.

How to fix it:#

- Examine the `nginx.conf` file: Recheck your NGINX configuration file for missing settings or syntax errors. It's also a good idea to run `nginx -t` to test the configuration.

- Environment variables: If you depend on environment variables for configuration, verify that they are passed to the container correctly. You can confirm this by running `docker inspect` against the running container.
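If your configuration relies on `${VAR}` placeholders (the official NGINX image can substitute these in template files under `/etc/nginx/templates`), a quick pre-flight check can list placeholders that have no matching environment variable. This is only a sketch: `missing_env_vars` is a hypothetical helper, and it assumes your placeholders are written in upper case, which also keeps it from flagging NGINX's own lower-case variables such as `${host}`.

```python
import os
import re

# Matches ${NAME} where NAME is upper case, e.g. ${NGINX_PORT}.
PLACEHOLDER = re.compile(r"\$\{([A-Z_][A-Z0-9_]*)\}")


def missing_env_vars(template_text, env=None):
    """Return a sorted list of placeholder names that are not set in `env`.

    `env` defaults to the current process environment.
    """
    env = os.environ if env is None else env
    names = sorted(set(PLACEHOLDER.findall(template_text)))
    return [name for name in names if name not in env]
```

Run against the text of a template, an empty list suggests every placeholder has a value; anything returned is worth comparing with the environment section of the `docker inspect` output.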

Best Practices for Running NGINX in Docker#

1. Use NGINX Images with Multi-Stage Builds#

Always use multi-stage builds when making Docker images for NGINX in order to minimise the size of the final image. If you're creating a custom NGINX image with modules or other dependencies, this is especially crucial. You may prevent superfluous build tools from bloating your image by keeping the build and runtime phases separate.

2. Use Docker Compose to Set Up Multiple Containers#

Use Docker Compose if your application includes several services (such as databases, app servers, and NGINX). Defining and managing multi-container configurations is made simple with this tool. To make sure that NGINX can always locate its upstream services by their container names, you may also make use of Docker Compose's integrated networking functionality.

3. Use Volumes to Your Advantage for Long-Term Data#

Mount NGINX's configuration files and log directories from Docker volumes to prevent data loss when a container is removed or recreated. That way, your settings and logs remain intact even if you have to rebuild the container.

4. Use External Tools and Docker Logs to Keep an Eye on NGINX#

To understand how NGINX is performing, use monitoring tools such as Prometheus and Grafana, and use `docker logs` to examine NGINX logs directly. You can also expose NGINX metrics (for example through the stub status endpoint) and scrape them to track server performance over time.
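The stub status endpoint returns a small plain-text report, which exporters turn into metrics. As a rough illustration of what that scraping involves, the Python sketch below parses such a report into a dictionary. `parse_stub_status` is a hypothetical helper, and the sample text follows the format shown in the NGINX documentation rather than output from a real server.

```python
import re

SAMPLE = """Active connections: 291
server accepts handled requests
 16630948 16630948 31070465
Reading: 6 Writing: 179 Waiting: 106
"""


def parse_stub_status(text):
    """Parse stub_status output into a dict of integer counters."""
    active = re.search(r"Active connections:\s+(\d+)", text)
    # The totals sit on a line that contains nothing but three integers.
    totals = re.search(r"^\s*(\d+)\s+(\d+)\s+(\d+)\s*$", text, re.MULTILINE)
    states = re.search(r"Reading:\s+(\d+)\s+Writing:\s+(\d+)\s+Waiting:\s+(\d+)", text)
    if not (active and totals and states):
        raise ValueError("unexpected stub_status format")
    accepts, handled, requests = (int(v) for v in totals.groups())
    reading, writing, waiting = (int(v) for v in states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
    }
```

In practice you would more likely let an existing exporter do this and have Prometheus scrape it; the sketch just shows which counters are available.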

5. Keep Security in Mind#

- Restrict access to sensitive endpoints: Always limit access to `/stub_status` or `/metrics` to trusted IPs.

- Run NGINX with as few privileges as possible: Avoid running NGINX as root inside the container. Use the least privileged user to reduce the attack surface.

- Use health checks: With Docker's `HEALTHCHECK` instruction, you can specify a command that checks whether NGINX is operational. This matters in production, where you need to know that your container is healthy and responding to requests.
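A health check command only has to report success or failure through its exit code: Docker treats 0 as healthy and 1 as unhealthy. The Python sketch below shows that logic. It is illustrative only, since the official NGINX image does not ship Python and the same idea is usually expressed with `curl` or `wget`; `probe` and the URL you pass to it are hypothetical.

```python
import http.client
import urllib.error
import urllib.request


def probe(url, timeout=3.0):
    """Return 0 if `url` answers with a 2xx/3xx status, otherwise 1."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 0 if 200 <= resp.status < 400 else 1
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        # Covers HTTP error statuses (4xx/5xx), refused connections,
        # timeouts, broken responses, and malformed URLs.
        return 1
```

A script wrapping this could end with `sys.exit(probe("http://localhost/"))`, so the exit code carries the result back to Docker.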

Wrapping It Up#

Although NGINX in Docker is a great option for isolated, scalable web infrastructure, it does come with some pitfalls. At first glance, debugging Dockerized NGINX can seem complex, with problems ranging from misconfigurations to service connectivity failures. But once you know the typical issues and best practices, you'll be well on your way to mastering this potent mix.

Therefore, don't panic the next time you encounter a problem! You can quickly get your NGINX container up and running by reviewing your configurations, checking your logs, and implementing some of these best practices.

To learn more about the Docker deployment options, check out our How to Deploy Your Image.

For a complete overview of our platform, visit Nife's homepage.