AWS DevOps tools are essential for modern software delivery: they streamline collaboration, automate workflows, and make infrastructure easier to manage at scale, which shortens time-to-market and improves application reliability.

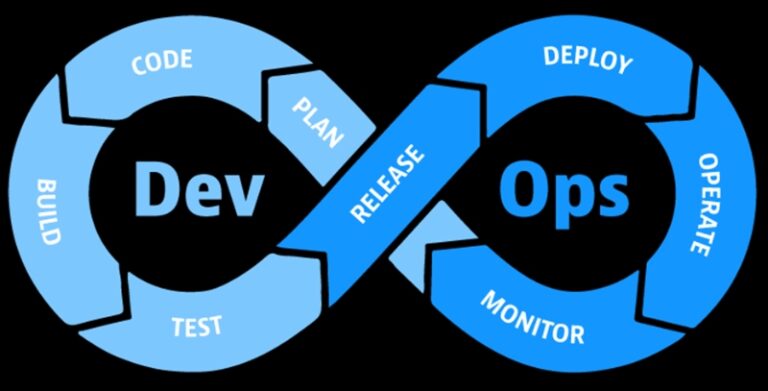

The software development space is changing faster than ever. The traditional software development model is slow, inefficient, and unable to keep up with changing customer needs. DevOps emerged as a game changer and revolutionized the software development lifecycle. DevOps is a set of practices that promotes automation, collaboration, and communication to deliver software more efficiently and reliably.

Release management is a critical aspect of DevOps. It ensures that software releases reach end users reliably and efficiently. Effective release management maintains code quality and increases customer satisfaction.

Businesses can streamline their software delivery and implement DevOps practices with Amazon Web Services (AWS), one of the most prominent cloud service providers. AWS provides robust DevOps tools that let you automate the various stages of your software delivery process.

In this article, we'll cover AWS DevOps tools and how they help organizations streamline their software delivery process. From CodePipeline to CodeDeploy, we'll look at how these tools support DevOps automation, collaboration, and continuous integration.

Understanding DevOps and Software Release Management

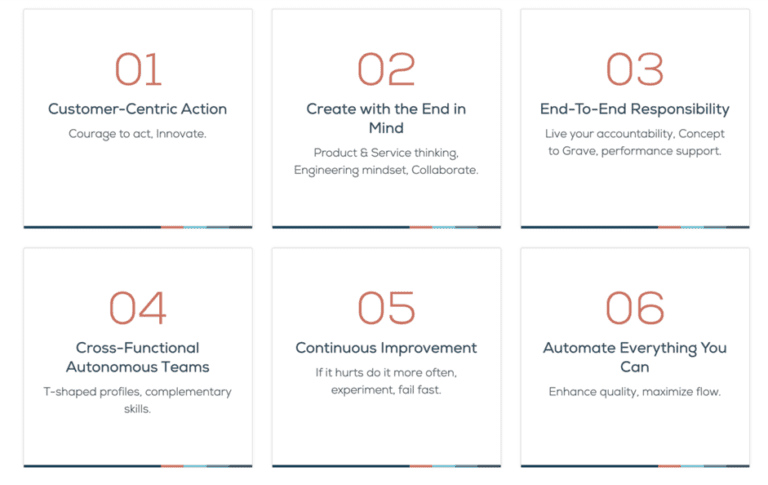

DevOps is a revolutionary approach to software development. Instead of the traditional siloed model, DevOps encourages collaboration and communication between development and operations teams, which leads to efficient and reliable software delivery. Here are some of its core principles.

DevOps automation: DevOps relies on automation tools to reduce manual effort and take over repetitive tasks. Automation makes the software development process predictable and streamlined.

Collaboration: Collaboration is at the heart of DevOps. DevOps promotes communication between teams, typically by creating cross-functional teams with diverse skill sets. The goal is a sense of shared responsibility and accountability.

Continuous Integration: CI is the practice of frequently merging code changes into a shared repository, where each change is built and tested automatically. This lets developers catch errors early in development.

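Continuous Delivery: With CD, every change that passes automated testing and quality checks is automatically prepared for release, so it can be deployed to production at any time. When that final deployment step is automated as well, the practice is called continuous deployment.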
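To make the CI and CD principles concrete, here is a minimal Python sketch of the gate they describe: each change merged into the shared repository is tested automatically, and only a change whose tests all pass is marked releasable. The `Change` type, the example checks, and `ci_cd_gate` are hypothetical names for illustration; in practice these steps run inside a CI service rather than in-process.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Change:
    """Hypothetical model of a code change merged into the shared repository."""
    commit_id: str
    author: str
    files: List[str] = field(default_factory=list)


# A test takes a change and returns True if it passes.
Test = Callable[[Change], bool]


def run_tests(change: Change, tests: List[Test]) -> List[str]:
    """CI step: run every automated test and return the names of the failures."""
    return [test.__name__ for test in tests if not test(change)]


def ci_cd_gate(change: Change, tests: List[Test]) -> str:
    """CD step: only a change with no failing tests becomes releasable."""
    failures = run_tests(change, tests)
    if failures:
        # Early feedback: the change is rejected before it can reach users.
        return f"{change.commit_id}: rejected (failed: {', '.join(failures)})"
    return f"{change.commit_id}: releasable"


# Example checks (hypothetical); real pipelines run unit, integration, and lint jobs.
def has_files(change: Change) -> bool:
    return len(change.files) > 0


def no_temp_files(change: Change) -> bool:
    return not any(name.endswith(".tmp") for name in change.files)


if __name__ == "__main__":
    checks = [has_files, no_temp_files]
    print(ci_cd_gate(Change("a1b2c3", "dev1", ["app.py"]), checks))
    print(ci_cd_gate(Change("d4e5f6", "dev2", ["app.py", "scratch.tmp"]), checks))
```

The first change is reported as releasable; the second is rejected because `no_temp_files` fails.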

The software release management process is crucial for deploying software efficiently and reliably into production. It covers planning, coordinating, and overseeing each release. Release management in DevOps ensures smooth software delivery. Key responsibilities in the software release management process are as follows:

Planning: Planning is crucial for software release management. Every step of a release is planned out with a clear timeline.

Version Control: Version control is another crucial aspect of release management in DevOps. Tracking code versions ensures that the correct version is deployed, and the version history makes it easier to pinpoint when a problem was introduced.

Automated Deployment: Another crucial aspect of the software release management process is automated deployment. Code changes and updates are deployed automatically, without manual steps. As a result, deployments become predictable and errors are reduced.

Monitoring and Rollback: Monitoring the software after each release confirms that it is working correctly. You should also have a rollback plan so you can return to the last known-good version if problems appear.
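Version control, automated deployment, and monitoring with rollback fit together as one loop: deploy a specific version, watch a health metric, and fall back to the last known-good version if it degrades. A minimal Python sketch of that loop follows. The `ReleaseManager` class, the error-rate metric, and the 5% threshold are assumptions for illustration; this is not an AWS API.

```python
from typing import Callable, List, Optional


class ReleaseManager:
    """Hypothetical release tracker: deploys versions and rolls back on bad health."""

    def __init__(self, error_rate_threshold: float = 0.05) -> None:
        self.history: List[str] = []        # versions that passed monitoring
        self.current: Optional[str] = None  # version currently serving traffic
        self.threshold = error_rate_threshold

    def deploy(self, version: str, measure_error_rate: Callable[[str], float]) -> str:
        """Deploy `version`, monitor it, and roll back if the error rate is too high."""
        previous = self.current
        self.current = version                    # automated deployment (simulated)
        error_rate = measure_error_rate(version)  # monitoring step
        if error_rate > self.threshold:
            # Rollback plan: restore the last known-good version, if there is one.
            self.current = previous
            return f"rolled back {version} -> {previous or 'no previous version'}"
        self.history.append(version)
        return f"{version} is live"


if __name__ == "__main__":
    manager = ReleaseManager()
    observed = {"v1.0.0": 0.01, "v1.1.0": 0.12}  # made-up error rates for the demo
    print(manager.deploy("v1.0.0", lambda v: observed[v]))  # v1.0.0 is live
    print(manager.deploy("v1.1.0", lambda v: observed[v]))  # rolled back v1.1.0 -> v1.0.0
```

Keeping an explicit history of versions that passed monitoring is what makes the rollback target unambiguous.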

The benefits of release management in DevOps are:

- Faster time to market

- Reduced downtime

- Improved quality

- Enhanced collaboration

Leveraging AWS DevOps Tools

Amazon Web Services (AWS) is one of the most prominent cloud service providers. AWS provides organizations with cloud-based services, including storage, databases, and computing power, and its resources can scale to handle varying workloads.

AWS also provides a collection of DevOps tools with unique capabilities for software release management. These tools work together to provide smooth software delivery. Here are some crucial AWS DevOps tools.

AWS CodePipeline: CodePipeline lets teams create custom pipelines with multiple stages, automating the build, test, and deployment process.

AWS CodeCommit: CodeCommit is a managed, Git-based source control service that provides secure repositories for developers. The code changes that developers push are stored in CodeCommit, and it integrates with other AWS services and third-party tools.

AWS CodeBuild: CodeBuild is a managed build service that compiles source code and runs automated tests to identify bugs or problems. It helps keep the source code in a deployable state at all times.
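CodeBuild reads its instructions from a buildspec file, whose version 0.2 format groups shell commands into phases such as `install`, `pre_build`, `build`, and `post_build`. The Python sketch below is only a local stand-in for that idea: it runs each phase's commands in order and stops at the first failing command. The `run_phases` helper and the sample commands are hypothetical, and CodeBuild's real phase-transition rules differ in some details.

```python
import subprocess
from typing import Dict, List, Tuple

# Phase names follow the buildspec 0.2 layout; the commands are placeholder examples.
PHASES: Dict[str, List[str]] = {
    "install": ["echo installing dependencies"],
    "pre_build": ["echo running linters"],
    "build": ["echo compiling", "echo running unit tests"],
    "post_build": ["echo packaging artifact"],
}

PHASE_ORDER = ["install", "pre_build", "build", "post_build"]


def run_phases(phases: Dict[str, List[str]]) -> Tuple[bool, List[str]]:
    """Run phases in order; return (succeeded, log of executed commands)."""
    log: List[str] = []
    for phase in PHASE_ORDER:
        for command in phases.get(phase, []):
            result = subprocess.run(command, shell=True, capture_output=True, text=True)
            log.append(f"[{phase}] {command} -> exit {result.returncode}")
            if result.returncode != 0:
                # A failing test or build step stops this simplified build,
                # so broken code is never packaged.
                return False, log
    return True, log


if __name__ == "__main__":
    ok, log = run_phases(PHASES)
    print("\n".join(log))
    print("BUILD SUCCEEDED" if ok else "BUILD FAILED")
```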

AWS CodeDeploy: CodeDeploy automates application deployments to different environments. It can deploy applications to Amazon EC2 instances, AWS Lambda functions, and even on-premises servers, ensuring consistent and reliable deployments at scale.
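For in-place deployments to EC2 or on-premises fleets, CodeDeploy can update a portion of the fleet at a time; its built-in deployment configurations include `CodeDeployDefault.OneAtATime`, `CodeDeployDefault.HalfAtATime`, and `CodeDeployDefault.AllAtOnce`. The Python sketch below models only that batching idea: it splits a fleet into batches, deploys each batch, and halts at the first batch with a failure so the rest of the fleet keeps running the previous version. The instance IDs and the `deploy_to` callback are hypothetical, and this is not how CodeDeploy is implemented internally.

```python
import math
from typing import Callable, List


def make_batches(instances: List[str], fraction: float) -> List[List[str]]:
    """Split the fleet into batches of ceil(fraction * fleet size) instances."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    size = max(1, math.ceil(len(instances) * fraction))
    return [instances[i:i + size] for i in range(0, len(instances), size)]


def rolling_deploy(
    instances: List[str],
    fraction: float,
    deploy_to: Callable[[str], bool],
) -> List[str]:
    """Deploy batch by batch and stop after the first batch with a failed instance.

    Returns the instances that were updated successfully.
    """
    updated: List[str] = []
    for batch in make_batches(instances, fraction):
        results = {instance: deploy_to(instance) for instance in batch}
        updated.extend(i for i, ok in results.items() if ok)
        if not all(results.values()):
            # Halting here keeps the remaining batches on the previous version.
            break
    return updated


if __name__ == "__main__":
    fleet = ["i-01", "i-02", "i-03", "i-04"]
    print(make_batches(fleet, 0.5))  # [['i-01', 'i-02'], ['i-03', 'i-04']]
    print(rolling_deploy(fleet, 0.5, lambda i: i != "i-03"))
```

A smaller fraction limits how much of the fleet a bad release can reach, at the cost of a slower rollout.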

By integrating these tools, development teams can automatically trigger builds, run tests, and deploy code updates, reducing manual effort and accelerating software delivery.
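One way to wire these tools together is a three-stage CodePipeline: a CodeCommit source action, a CodeBuild action, and a CodeDeploy action, each handing an artifact to the next. The Python sketch below builds such a pipeline declaration as a plain dictionary in the shape of CodePipeline's pipeline structure. The repository, project, application, deployment group, bucket, and role ARN values are placeholders, not real resources, and the sketch itself makes no AWS calls; with credentials configured, a dictionary like this could be passed to boto3's `create_pipeline(pipeline=...)` on a `codepipeline` client.

```python
import json
from typing import Any, Dict, List


def build_pipeline(name: str, repo: str, branch: str, build_project: str,
                   app_name: str, deployment_group: str,
                   role_arn: str, artifact_bucket: str) -> Dict[str, Any]:
    """Return a declaration: Source (CodeCommit) -> Build (CodeBuild) -> Deploy (CodeDeploy)."""

    def action(category: str, provider: str, config: Dict[str, str],
               inputs: List[str], outputs: List[str]) -> Dict[str, Any]:
        return {
            "name": provider,
            "actionTypeId": {"category": category, "owner": "AWS",
                             "provider": provider, "version": "1"},
            "configuration": config,
            "inputArtifacts": [{"name": n} for n in inputs],
            "outputArtifacts": [{"name": n} for n in outputs],
        }

    return {
        "name": name,
        "roleArn": role_arn,
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": [
            # A push to the branch starts the pipeline and produces SourceOutput.
            {"name": "Source", "actions": [action(
                "Source", "CodeCommit",
                {"RepositoryName": repo, "BranchName": branch},
                [], ["SourceOutput"])]},
            # CodeBuild builds and tests SourceOutput, producing BuildOutput.
            {"name": "Build", "actions": [action(
                "Build", "CodeBuild", {"ProjectName": build_project},
                ["SourceOutput"], ["BuildOutput"])]},
            # CodeDeploy rolls BuildOutput out to the deployment group.
            {"name": "Deploy", "actions": [action(
                "Deploy", "CodeDeploy",
                {"ApplicationName": app_name, "DeploymentGroupName": deployment_group},
                ["BuildOutput"], [])]},
        ],
    }


if __name__ == "__main__":
    pipeline = build_pipeline(
        name="demo-pipeline", repo="demo-repo", branch="main",
        build_project="demo-build", app_name="demo-app",
        deployment_group="demo-group",
        role_arn="arn:aws:iam::123456789012:role/DemoPipelineRole",  # placeholder
        artifact_bucket="demo-artifact-bucket",                      # placeholder
    )
    print(json.dumps([stage["name"] for stage in pipeline["stages"]]))
```

The artifact names are what chain the stages: each action's input must match an earlier action's output.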

Supercharging Software Delivery with AWS DevOps Tools

Benefits of using AWS DevOps tools in the release management process

Accelerated Delivery: With AWS DevOps automation, many parts of the software delivery process can be automated. Automation reduces the chance of human error and makes releases more reliable and predictable. It also shortens the time to market for new features.

Continuous Integration and Continuous Delivery (CI/CD): AWS CodePipeline and CodeBuild enable CI/CD. Code changes from multiple developers are pushed to AWS CodeCommit, which triggers an automated build, test, and deployment cycle.

Scalability and Flexibility: AWS provides highly scalable and flexible infrastructure, allowing teams to adapt to changing project requirements and handle varying workloads efficiently. DevOps teams can provision cloud resources on demand, which helps optimize costs and resource utilization.

Real-life success stories of organizations leveraging AWS DevOps for accelerated delivery:

Netflix: Netflix runs its vast microservices architecture on AWS and was one of the first large companies to adopt AWS for faster, more frequent delivery.

Airbnb: Airbnb migrated its infrastructure to AWS and embraced DevOps practices, leading to shorter release cycles and faster time-to-market for product updates. They leveraged AWS DevOps tools to automate testing, deployment, and monitoring, improving application reliability and the user experience.

How AWS DevOps tools enable better collaboration and communication among teams:

Centralized Code Repository: AWS CodeCommit provides a central code repository for developers. Because it is Git-based, every developer's changes are recorded in one shared version history. This improves collaboration and ensures everyone works from an up-to-date copy.

Automated Testing and Feedback Loop: AWS CodePipeline integrates with various testing tools, allowing code changes to be tested automatically. When a test fails, feedback goes to the development team so they can fix the issue quickly.

DevOps as a Service (DaaS) with AWS

DevOps as a Service (DaaS) is a game-changing approach that uses AWS's cloud capabilities to deliver DevOps tools and services as managed offerings, paid for on a pay-as-you-go basis. With DevOps as a Service, organizations can offload the operational burden of managing complex DevOps infrastructure and focus on software development and innovation.

AWS's DaaS offerings include managed CI/CD pipelines, version control systems, and build-and-test services. With DaaS, businesses can quickly increase agility, reduce time-to-market, and scale their development processes.

As technology evolves, organizations can embrace DevOps as a Service (DaaS) with AWS to optimize resource utilization, streamline software delivery, and stay ahead of the competition.

Introduction to Nife: A Cloud Computing Platform for Businesses

Nife Labs is a global edge application platform that gives businesses and developers a powerful cloud computing solution. The platform allows rapid application deployment across any infrastructure, leading to faster scaling and simpler management.

Nife Labs allows businesses to focus on their core competencies, accelerate their software delivery processes, and eliminate manual, time-consuming tasks.

Nife Labs' unique features include the ability to deploy applications to any region or location without infrastructure concerns, real-time monitoring with customizable reports and alerts, and the flexibility to manage and extend applications based on specific criteria. These features streamline application management and enhance performance across various geographical locations.

Supercharge your software delivery with Nife!

Conclusion

Release management in DevOps, amplified by AWS DevOps tools, makes software delivery faster and more efficient. By embracing DevOps automation, collaboration, and continuous integration, teams can build a seamless CI/CD pipeline with AWS CodePipeline, CodeCommit, CodeBuild, and CodeDeploy. Adopting DevOps as a Service (DaaS) on AWS adds scalability and productivity, helping organizations thrive in a fast-changing digital landscape.