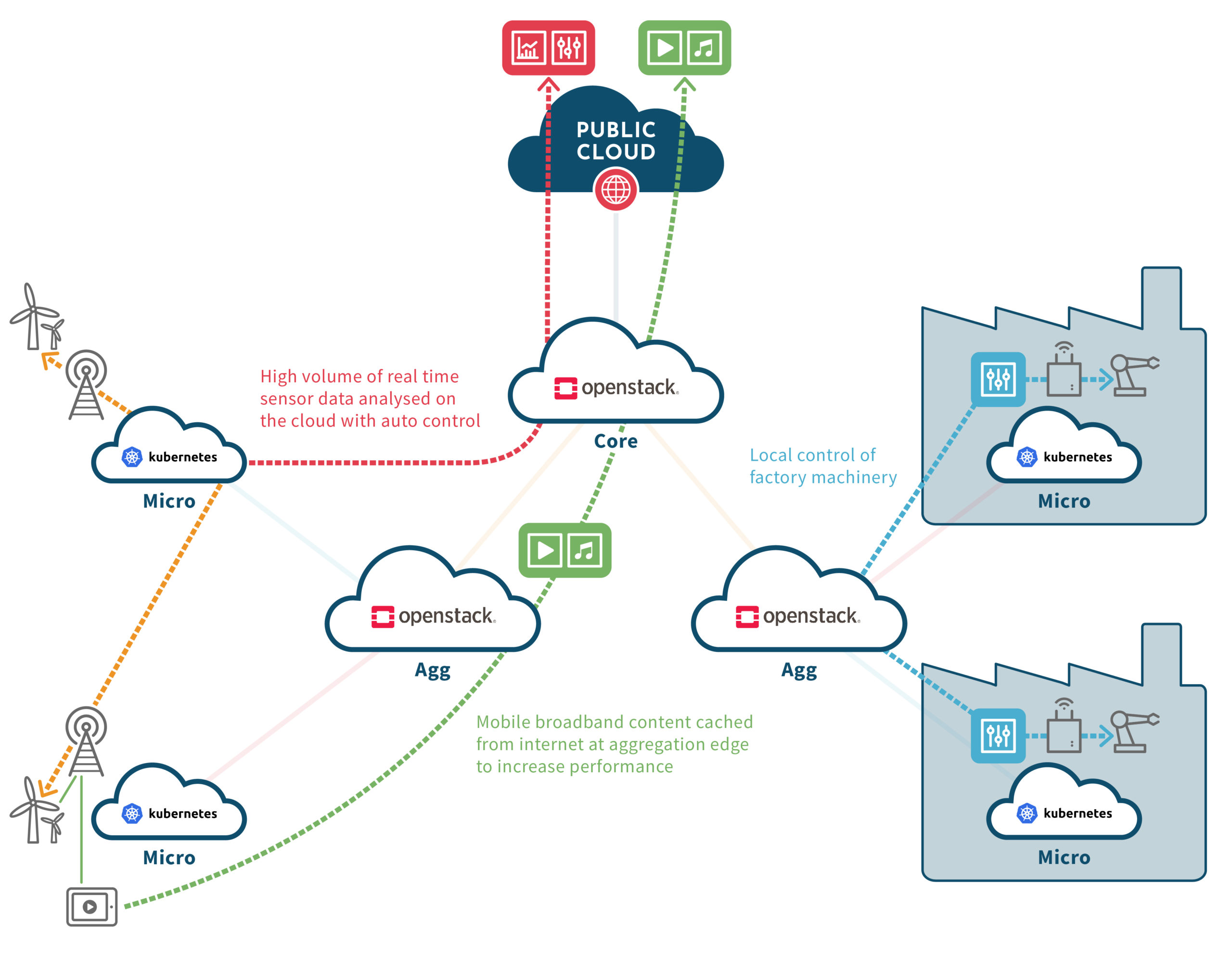

Application Deployment & The Various Deployment Types Explained

What is Deployment in Simple Words?

Deployment is the process of taking code from version control and making it available to users, ideally in an automated fashion. It involves delivering applications, modules, updates, and patches from developers to users. The methods developers use to build, test, and deploy new code affect how quickly a product can respond to changes and the quality of each update.

What is the Use of Deployment?

Deployment automation allows you to deploy software to testing and production environments with a single push. Automation reduces the risk associated with manual processes in the production environment.
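As a rough illustration of what a single push can trigger, here is a minimal Python sketch of an automated pipeline. The stage functions (`build`, `run_tests`, `deploy_to`) are hypothetical placeholders that only print; the point is that the stages always run in the same order and the pipeline stops at the first failure, so a manual step cannot be skipped or done out of order.

```python
from typing import Callable

Stage = Callable[[], bool]


# Hypothetical stages: real ones would invoke a build tool, a test runner,
# and a deployment API. Each returns True on success.
def build() -> bool:
    print("building artifact")
    return True


def run_tests() -> bool:
    print("running tests")
    return True


def deploy_to(env: str) -> Stage:
    def stage() -> bool:
        print(f"deploying to {env}")
        return True
    return stage


def run_pipeline(stages: list[tuple[str, Stage]]) -> list[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: list[str] = []
    for name, stage in stages:
        if not stage():
            print(f"stage '{name}' failed; stopping the pipeline")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    pipeline = [
        ("build", build),
        ("test", run_tests),
        ("deploy-testing", deploy_to("testing")),
        ("deploy-production", deploy_to("production")),
    ]
    print(run_pipeline(pipeline))
```

Because production deployment is just the last stage, it only runs when every earlier stage has succeeded.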

There Are Six Types of Deployment

- In-Place Deployment

- Blue/Green Deployment

- Canary Deployment

- Ramped Deployment

- Shadow Deployment

- A/B Testing Deployment

What is a Deployment Strategy in Application Deployment?

A deployment strategy is a technique DevOps teams use to release a new version of a software solution. It defines how network traffic in the production environment is moved from the old version to the new version. Depending on an organization's needs, the choice of strategy affects downtime and operational costs.

When deploying new resources and code versions into your production environment, the ideal is automation with minimal service interruption. A good deployment strategy matters because it reduces manual configuration, improves serviceability, and reduces downtime during a deployment.

1. In-Place Deployment

An in-place deployment updates the application version without replacing infrastructure components. The previous version of the application on each compute resource is stopped, the latest application is installed, and the new version is started and validated. This method minimizes infrastructure costs and management overhead but can affect application availability during deployment.

The deployment process involves updating the existing infrastructure with the new code and restarting the application.

Once the new version is deployed on every resource, the deployment is complete.

In-place deployments are cheaper but can cause application downtime. Mitigation strategies include staggering the deployment so that only some resources are updated at a time, and ensuring the remaining resources have enough capacity to handle demand.
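The in-place steps (stop, install, start, validate) can be sketched in Python as follows. This is a minimal simulation, not a real deployment tool: `Server` and its methods are hypothetical stand-ins for whatever agent or service manager actually controls each compute resource. The `batch_size` parameter models staggering, since only the current batch is offline at any moment.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical handle for one compute resource."""
    name: str
    version: str
    running: bool = True

    # Simulated operations; a real tool would call an agent or service manager.
    def stop(self) -> None:
        self.running = False

    def install(self, version: str) -> None:
        self.version = version

    def start(self) -> None:
        self.running = True

    def healthy(self) -> bool:
        return self.running


def in_place_deploy(servers: list[Server], version: str, batch_size: int = 1) -> None:
    """Update existing servers in place, one batch at a time.

    Servers outside the current batch keep running and serving traffic.
    """
    for i in range(0, len(servers), batch_size):
        batch = servers[i:i + batch_size]
        for server in batch:
            server.stop()            # previous version is stopped
            server.install(version)  # latest version is installed
            server.start()           # new version is started
        # Validate the batch before touching the next one.
        for server in batch:
            if not server.healthy():
                raise RuntimeError(f"{server.name} failed validation on {version}")


if __name__ == "__main__":
    fleet = [Server(f"web-{n}", "v1") for n in range(4)]
    in_place_deploy(fleet, "v2", batch_size=2)
    print([(s.name, s.version) for s in fleet])
```

With four servers and `batch_size=2`, at most two servers are stopped at once; `batch_size=1` is the most cautious setting but takes the longest.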

2. Blue/Green Deployment

The blue/green deployment strategy involves creating two independent infrastructure environments. The blue environment runs the previous version, while the green environment runs the new version. Once green has been validated, traffic is shifted to it, for example by updating the DNS record to point to green's load balancer.

Because the blue environment keeps running, a failed release can be rolled back quickly by switching traffic back to blue. The trade-off is the extra cost of running two environments at the same time.

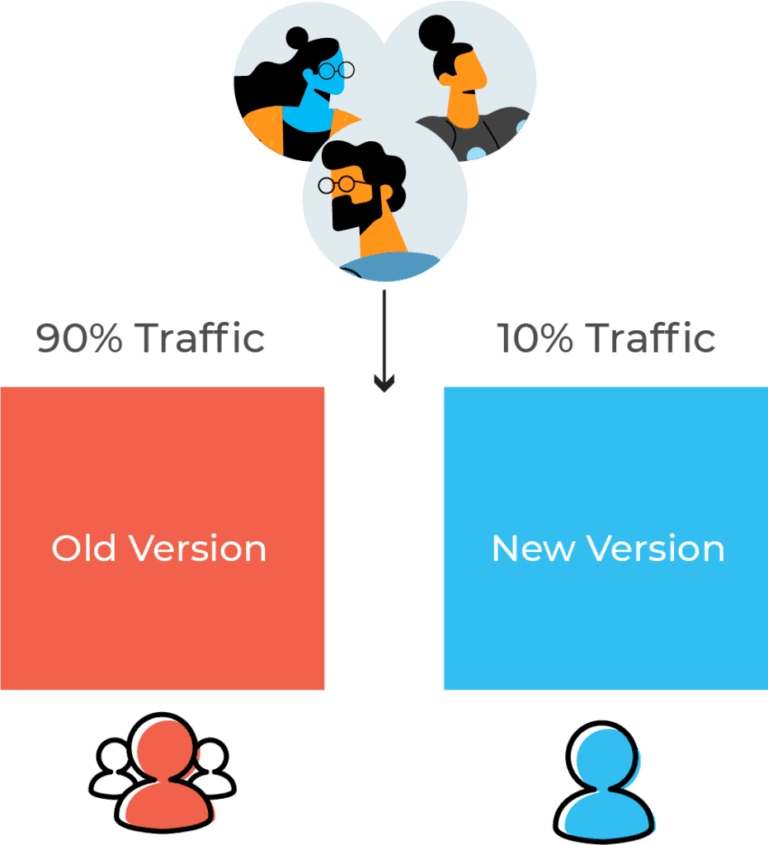
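The cut-over and rollback can be modeled as a single pointer that records which environment is live. In this minimal Python sketch, `BlueGreenRouter` is a hypothetical stand-in for the DNS record or load balancer; a deployment always targets the idle environment, and rolling back is simply switching the pointer again.

```python
from typing import Optional


class BlueGreenRouter:
    """Hypothetical stand-in for the DNS record or load balancer that picks the live environment."""

    def __init__(self, blue_version: str) -> None:
        self.environments: dict[str, Optional[str]] = {"blue": blue_version, "green": None}
        self.live = "blue"

    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version: str) -> None:
        # The new version is installed in the environment that serves no traffic.
        self.environments[self.idle()] = version

    def switch(self) -> None:
        # Move all traffic at once, e.g. by repointing DNS at the other load balancer.
        if self.environments[self.idle()] is None:
            raise RuntimeError("idle environment has nothing deployed")
        self.live = self.idle()

    def live_version(self) -> Optional[str]:
        return self.environments[self.live]


router = BlueGreenRouter("v1")
router.deploy_to_idle("v2")   # green now runs v2, blue still serves v1
router.switch()               # cut over: green is live
print(router.live, router.live_version())  # green v2
router.switch()               # rollback: blue is still running v1
print(router.live, router.live_version())  # blue v1
```

The next release would go to whichever environment is idle, so blue and green alternate roles over time.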

3. Canary Deployment

In canary deployment, the new version is gradually introduced while retaining the old version. For example, 10% of traffic might go to the new version while 90% remains with the old version. This approach helps test the stability of the new version with live traffic.

Canary deployment makes performance monitoring easier and rollback faster, because only a small share of traffic reaches the new version, but a staged rollout can be slow and time-consuming.

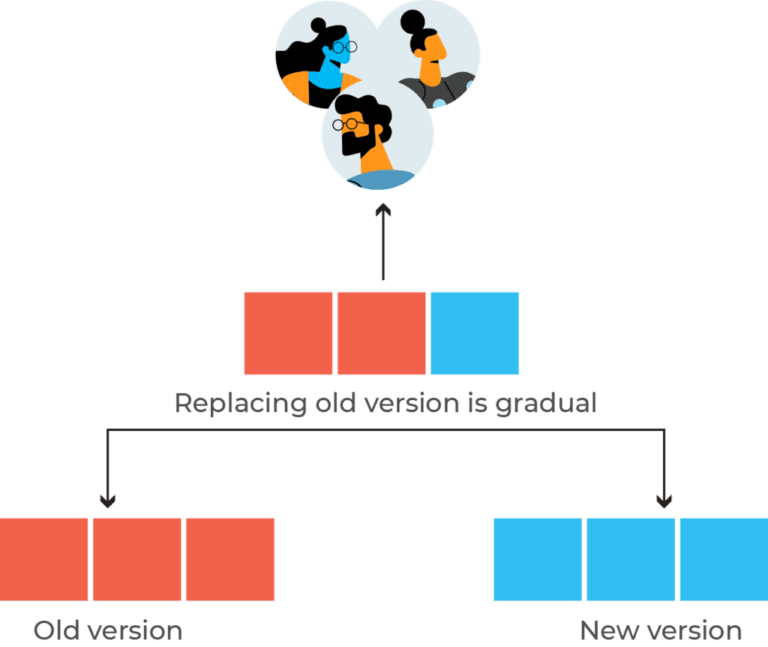
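One common way to send a fixed share of traffic, such as the 10% in the example above, to the canary is to bucket users by a hash of their ID. The sketch below is an assumption about how routing might be done (weighted targets on a load balancer are another option). Hashing keeps each user on the same version across requests, and raising `canary_percent` in stages (say 10, 25, 50, 100) keeps earlier canary users on the canary, because their bucket stays below the higher threshold.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Return True if this user should be served by the canary (new) version.

    Each user ID hashes to a stable bucket from 0 to 99; buckets below
    canary_percent go to the canary, the rest stay on the old version.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < canary_percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    on_canary = sum(routes_to_canary(u, 10) for u in users)
    print(f"{on_canary} of {len(users)} users routed to the canary")  # roughly 10%
```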

4. Ramped Deployment

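The ramped deployment strategy gradually replaces instances of the old version with instances of the new version, one at a time. Because the remaining instances keep serving traffic during the rollout, this method can avoid downtime and allows performance to be monitored as the new version takes over.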

The rollback process is lengthy, as it involves reverting instances one by one.
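A minimal Python sketch of the ramped pattern, including the slow, one-by-one rollback. The pool is just a list of version strings and `healthy` is a hypothetical health-check callback; a real orchestrator would create, drain, and remove actual instances.

```python
from typing import Callable


def ramped_rollout(pool: list[str], new_version: str,
                   healthy: Callable[[int], bool]) -> list[str]:
    """Replace instances one at a time, checking health after each replacement.

    `healthy(i)` is a hypothetical health check for instance i. If it fails,
    the already-updated instances are reverted one by one, which is why
    rollback in this strategy is slow.
    """
    original = list(pool)
    current = list(pool)
    for i in range(len(current)):
        current[i] = new_version  # the other instances keep serving traffic
        if not healthy(i):
            for j in range(i, -1, -1):
                current[j] = original[j]
            break
    return current


if __name__ == "__main__":
    print(ramped_rollout(["v1"] * 4, "v2", healthy=lambda i: True))   # ['v2', 'v2', 'v2', 'v2']
    print(ramped_rollout(["v1"] * 4, "v2", healthy=lambda i: i < 2))  # ['v1', 'v1', 'v1', 'v1']
```

In the second call, the third instance fails its check, so the three updated instances are reverted in reverse order before the rollout stops.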

5. Shadow Deployment

In shadow deployment, the new version is deployed alongside the old version, but it does not serve users. Requests sent to the old version are copied (mirrored) to the shadow version to test how it handles real traffic, and the shadow version's responses are not returned to users.

This strategy allows for performance monitoring and stability testing but is complex and expensive to set up.

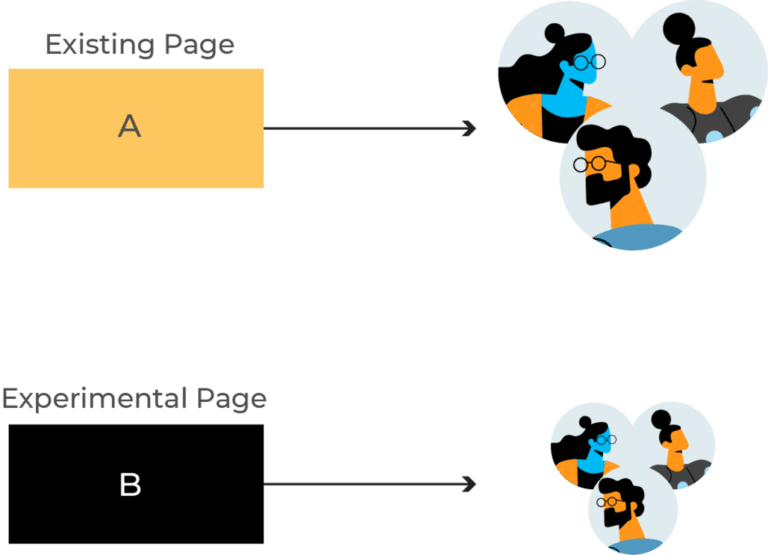
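The mirroring idea can be sketched as a request handler that always answers from the old version and calls the shadow version only to compare results. This is a simplified, synchronous Python illustration with hypothetical handler functions; in practice mirroring is usually done by a proxy or service mesh, often asynchronously so the shadow cannot add latency for users.

```python
from typing import Callable

Handler = Callable[[str], str]


def handle_with_shadow(request: str, primary: Handler, shadow: Handler,
                       mismatches: list[str]) -> str:
    """Serve the request from the old version and mirror it to the shadow.

    Only the primary response reaches the user. The shadow's response is
    compared and recorded; if the shadow raises, the request is recorded
    as a mismatch instead of affecting the user.
    """
    response = primary(request)
    try:
        if shadow(request) != response:
            mismatches.append(request)
    except Exception:
        mismatches.append(request)
    return response


if __name__ == "__main__":
    def old_version(req: str) -> str:
        return req.upper()

    def new_version(req: str) -> str:
        return "oops" if req == "b" else req.upper()  # deliberately buggy on "b"

    diffs: list[str] = []
    print([handle_with_shadow(r, old_version, new_version, diffs) for r in "abc"])  # ['A', 'B', 'C']
    print(diffs)  # ['b']
```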

6. A/B Testing Deployment

A/B testing deployment involves deploying the new version alongside the old version, but routing only a subset of users to the new version. This approach measures the effectiveness of the new functionality based on user behavior, such as conversion rates.

Statistics from A/B testing help developers make informed decisions, but setting it up is complex and requires a sophisticated load balancer that can route users to a version based on criteria such as cookies, headers, or other user attributes.
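A minimal Python sketch of the two halves of an A/B test: assigning users to a variant and computing a per-variant statistic. The 50/50 hash split, the experiment name, and the conversion-rate metric are assumptions for illustration; a real setup would typically do the routing at the load balancer and apply a statistical significance test before drawing conclusions.

```python
import hashlib
from collections import defaultdict


def assign_variant(user_id: str, experiment: str) -> str:
    """Deterministically split users 50/50: 'A' keeps the old version, 'B' gets the new one."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def conversion_rates(events: list[tuple[str, bool]], experiment: str) -> dict[str, float]:
    """Given (user_id, converted) pairs, return the conversion rate of each variant."""
    totals: defaultdict[str, int] = defaultdict(int)
    conversions: defaultdict[str, int] = defaultdict(int)
    for user_id, converted in events:
        variant = assign_variant(user_id, experiment)
        totals[variant] += 1
        conversions[variant] += int(converted)
    return {variant: conversions[variant] / totals[variant] for variant in totals}


if __name__ == "__main__":
    # Simulated data: every third user converts, regardless of variant.
    events = [(f"user-{i}", i % 3 == 0) for i in range(3000)]
    print(conversion_rates(events, "new-checkout"))
```

Including the experiment name in the hash gives each experiment its own independent split, so the same users are not always grouped together across tests.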