In today's rapidly evolving technological landscape, the Well-Architected Framework is a compass that guides businesses toward resilient, high-performing, and efficient cloud solutions.

Many businesses have moved to the cloud in recent years. But does adoption alone solve every problem? No. Adoption by itself does not guarantee cost-effectiveness or operational efficiency.

This is where the Well-Architected Framework steps in to fill the gap. Developed by Amazon Web Services (AWS), a leading cloud computing platform, it is a set of practices designed to help businesses implement secure, reliable, cost-effective, and efficient cloud architecture.

A periodic review of your cloud architecture is crucial to ensure your cloud solution meets a high standard of security, reliability, and efficiency. In this article, we'll look at the Well-Architected Framework review, its benefits, and its significance. Businesses can maximize their cloud investment by implementing best practices and identifying areas for improvement.

Let's dive into the article. We'll start by understanding the pillars of a well-architected framework.

Understanding Key Pillars of Well-Architected Framework

The Well-Architected Framework guides the design of applications and infrastructure in the cloud. This article covers five key pillars (AWS added a sixth, Sustainability, in 2021). Each pillar addresses a critical aspect of a resilient, efficient, and robust architecture that aligns with business goals.

Security: Security is an essential pillar of the framework because there is always a risk of cyber-attacks and data breaches. The security pillar emphasizes identity and access controls and encryption. Security is vital to ensure data integrity and confidentiality throughout an application's lifecycle.

Reliability: Reliability is another pillar of the Well-Architected Framework. This pillar emphasizes designing applications that can recover from failures quickly, which significantly affects the user experience. By leveraging scaling and fault tolerance, organizations can ensure high availability and minimal downtime, boosting the customer experience.

Performance Efficiency: Performance efficiency is another essential pillar of the framework. By monitoring their workloads, organizations can improve response times and the efficiency of the application deployment process. By incorporating best practices based on the data available, organizations can cost-optimize and provision workloads effectively.

Cost Optimization: Cost optimization while maintaining high quality is a challenge. The cost optimization pillar guides businesses to identify cost drivers and leverage cloud-native services to maintain the desired level of service. By analyzing patterns organizations can

Operational Excellence: The operational excellence pillar of the framework enhances operations through best practices and strategies. These practices include automation, continuous improvement, and streamlined management.

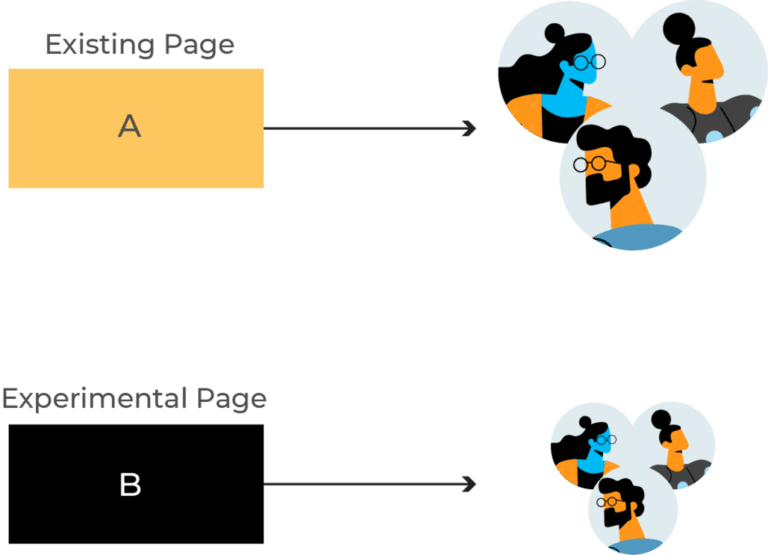

The Well-Architected Framework Review involves assessing an architecture against these pillars. AWS provides a set of questions and considerations for each pillar, allowing organizations to evaluate their systems and identify areas for improvement.
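
A review of this kind can be tracked as a simple checklist. The sketch below is only illustrative: the questions, the `Answer` record, and the 0.75 threshold are assumptions made for this example, not AWS's actual question set or scoring method.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Answer:
    pillar: str      # one of the pillars described above
    question: str    # hypothetical review question
    satisfied: bool  # True if the workload follows the practice


def pillar_scores(answers):
    """Return the fraction of satisfied questions for each pillar."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for a in answers:
        totals[a.pillar] += 1
        if a.satisfied:
            passed[a.pillar] += 1
    return {p: passed[p] / totals[p] for p in totals}


def areas_for_improvement(answers, threshold=0.75):
    """List pillars scoring below the (assumed) threshold, lowest first."""
    scores = pillar_scores(answers)
    weak = [p for p, s in scores.items() if s < threshold]
    return sorted(weak, key=lambda p: scores[p])


review = [
    Answer("Security", "Is data encrypted at rest?", True),
    Answer("Security", "Is access granted by role?", False),
    Answer("Reliability", "Are backups tested regularly?", True),
    Answer("Cost Optimization", "Are idle resources removed?", False),
]

print(pillar_scores(review))
print(areas_for_improvement(review))
```

Sorting the weakest pillars first gives the review a natural priority order for follow-up work.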

Now let's review some components of a well-architected framework. We'll review the significance of each component. We'll also explore strategies and best practices.

Data Integrity Considerations in a Well-Architected Framework

Data integrity is a crucial aspect of the Well-Architected Framework in cloud optimization. In recent years the volume of cyber attacks on IT companies has risen sharply. Organizations store sensitive data belonging to users and to other organizations. Data breaches not only damage an organization's reputation but also put users at risk.

Because user data is sensitive, many industries regulate how it must be protected, so a data breach can also expose an organization to legal consequences. Cyber attacks can also impair an organization's operations and decision-making.

To ensure data integrity, organizations can use encryption, access control, identity management, and backup and recovery features.

Encryption helps protect data both at rest and in transit. Strong encryption keeps data unreadable to unauthorized parties even if a breach or other disaster exposes it.
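
Encryption addresses confidentiality; detecting whether stored data has been altered is commonly handled with a keyed hash alongside it. The following is a minimal sketch of that complementary technique (HMAC-SHA256, not encryption) using only Python's standard library. The key and record are placeholders invented for the example.

```python
import hashlib
import hmac


def sign(data: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the data."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify(data: bytes, key: bytes, tag: str) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(data, key), tag)


key = b"example-key-loaded-from-a-secrets-manager"  # placeholder, not a real key
record = b'{"account": "12345", "balance": 100}'
tag = sign(record, key)

tampered = record.replace(b"100", b"900")
print(verify(record, key, tag))    # untouched record
print(verify(tampered, key, tag))  # modified record
```

Storing the tag next to the record lets a later read confirm that the bytes have not changed since they were written.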

Strong IAM (Identity and Access Management) is also vital for data integrity. Access should be granted based on assigned roles.
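
Role-based access can be pictured as a lookup from roles to permitted actions, with everything else denied. This is a simplified sketch rather than how any particular IAM service evaluates policies; the role names and action strings are hypothetical.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "auditor": {"report:read"},
    "teller": {"account:read", "transaction:create"},
    "admin": {"account:read", "account:update", "report:read", "transaction:create"},
}


def is_allowed(roles, action):
    """Allow an action only if one of the user's roles grants it (default deny)."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["teller"], "transaction:create"))
print(is_allowed(["auditor"], "account:update"))
print(is_allowed(["unknown-role"], "report:read"))
```

Defaulting to deny means a missing or misspelled role never grants access by accident.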

Cost Optimization in a Well-Architected Framework

Cost optimization is a critical aspect of cloud architecture, especially in the financial services industry, where workloads and cost challenges are distinctive. Cost optimization tends to be more straightforward in industries with fewer constraints.

The finance industry, however, has a lot of strings attached, such as regulatory compliance, sensitive user data, and demanding workloads. This section explores practices to ensure cost optimization.

Financial services industry workloads are data intensive and require real-time processing. This need for storage and processing power can increase cloud costs significantly if not managed properly.

For cost optimization, analyze workloads and provision resources accordingly. For significant cost reduction, you can use auto scaling and spot instances. Auto scaling adjusts resources automatically according to demand, whereas spot instances let you use spare compute capacity at a fraction of the on-demand price.
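
The effect of spot pricing is easy to estimate with simple arithmetic. The sketch below uses assumed example prices, not real AWS rates, and ignores the fact that spot capacity can be reclaimed by the provider, which matters for workloads that cannot tolerate interruption.

```python
def monthly_cost(hourly_price: float, instances: int, hours: float = 730) -> float:
    """Cost of running `instances` at `hourly_price` for `hours` per month."""
    return hourly_price * instances * hours


def savings_percent(on_demand: float, spot: float) -> float:
    """Percentage saved by paying `spot` instead of `on_demand`."""
    return (on_demand - spot) / on_demand * 100


# Assumed example prices in USD per instance-hour; real prices vary.
ON_DEMAND_PRICE = 0.10
SPOT_PRICE = 0.03

on_demand_bill = monthly_cost(ON_DEMAND_PRICE, instances=10)
spot_bill = monthly_cost(SPOT_PRICE, instances=10)

print(f"On-demand: ${on_demand_bill:.2f}")
print(f"Spot:      ${spot_bill:.2f}")
print(f"Savings:   {savings_percent(on_demand_bill, spot_bill):.0f}%")
```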

Release Management: Seamless Deployment and Change Control

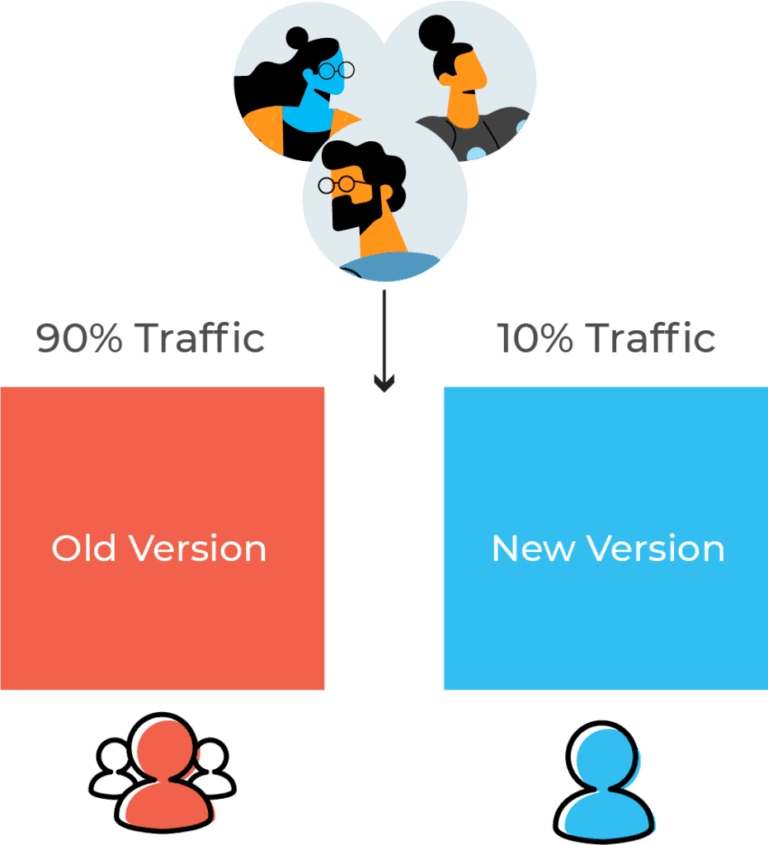

Release management is a crucial aspect of cloud architecture. It ensures new features, updates, and bug fixes reach the end user quickly and that the application keeps working smoothly. Within the Well-Architected Framework, it falls under Operational Excellence, supporting smooth software deployment and version control.

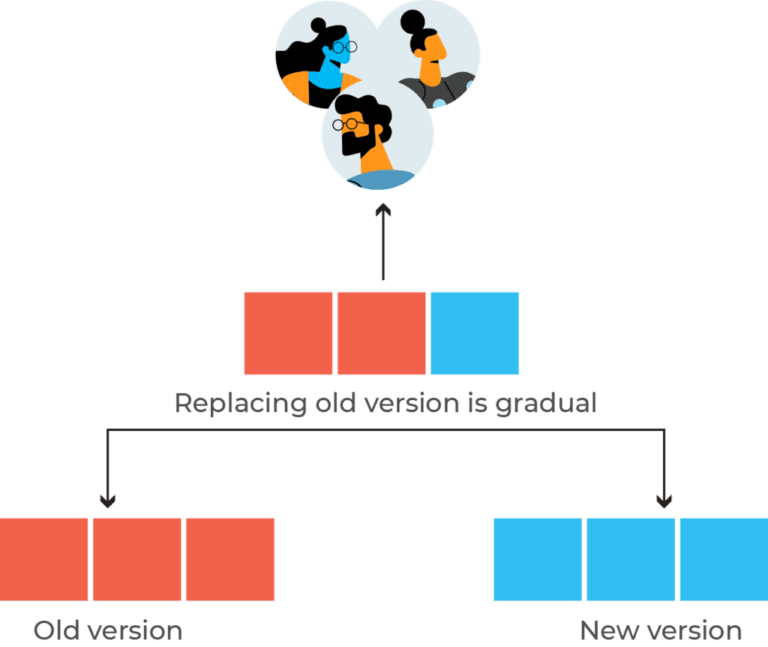

Effective release management strategies include automation, version control, and seamless release cycles. Automated testing helps keep code quality high and catches bugs and other errors early in development. Automation also makes the development lifecycle more efficient and reduces the chance of human error.

Version control is an essential consideration for seamless deployment. It stores the code history from the start of development, which makes it easier to trace errors to a specific change and fix them quickly. Branching is another helpful strategy: you can use it to work on new features without affecting the main codebase. Prepare rollback plans in case of failed deployments.
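
A rollback plan can be as simple as "keep the last good version and restore it if a health check fails". The sketch below illustrates that idea only; the version strings and the `fake_health_check` function are hypothetical stand-ins for a real deployment tool and smoke test.

```python
def deploy_with_rollback(current_version, new_version, health_check):
    """Switch to new_version, reverting to current_version if the check fails.

    Returns the version left running and whether a rollback happened.
    """
    active = new_version        # "deploy" the new release
    if health_check(active):
        return active, False    # release is healthy, keep it
    active = current_version    # rollback plan: restore the last good version
    return active, True


def fake_health_check(version):
    """Stand-in for a real smoke test; pretends that v2.0.0 is broken."""
    return version != "v2.0.0"


print(deploy_with_rollback("v1.4.2", "v1.5.0", fake_health_check))
print(deploy_with_rollback("v1.5.0", "v2.0.0", fake_health_check))
```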

Release management practices in a well-architected framework offer several benefits including consistency, flexibility, reduced risks, and faster time to market.

Monitoring Performance and Ensuring Reliability

Performance and reliability are crucial in a cloud architecture because they directly impact the user experience. The Performance Efficiency and Reliability pillars of the Well-Architected Framework emphasize real-time monitoring and proactive cloud optimization.

Monitoring performance in real time is crucial to ensure the application functions properly. It helps identify and resolve problems early on. Another benefit of real-time monitoring is that you can identify and remove performance bottlenecks.
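
One common way to catch problems early without reacting to every momentary spike is to alert only when a metric stays above a threshold for several consecutive readings. The following is a minimal sketch of that rule; the latency figures, the 500 ms threshold, and the three-period window are assumptions for illustration.

```python
def should_alarm(samples, threshold, periods=3):
    """True if the last `periods` samples are all above `threshold`."""
    if len(samples) < periods:
        return False  # not enough data yet
    return all(value > threshold for value in samples[-periods:])


# Hypothetical p95 latency readings in milliseconds, one per minute.
latency_ms = [120, 135, 128, 510, 540, 620]

print(should_alarm(latency_ms, threshold=500))      # sustained breach
print(should_alarm(latency_ms[:4], threshold=500))  # single spike only
```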

Monitor key metrics and design a sustainable cloud architecture. Design mechanisms for automated recovery and automated scaling. Use load balancing to spread traffic across resources, and design your cloud architecture with a failover mechanism for high availability.
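
Load balancing and failover can be combined in a few lines: rotate through the backends and skip any that have failed a health check. This is a toy sketch, not a production load balancer, and the backend names are hypothetical.

```python
from itertools import cycle


class LoadBalancer:
    """Round-robin balancer that skips backends marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        """Return the next healthy backend, or raise if none are left."""
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


lb = LoadBalancer(["app-a", "app-b", "app-c"])  # hypothetical backend names
print([lb.next_backend() for _ in range(3)])
lb.mark_down("app-b")                           # simulate a failed health check
print([lb.next_backend() for _ in range(4)])
```

Traffic keeps flowing to the remaining backends while the failed one is out of rotation, and `mark_up` returns it once it recovers.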

Monitoring performance and reliability practices offer several benefits which include proactive capacity planning, resilience, and timely issue resolution.

Sustainability and Scalability in Architecting Workloads

In the Well-Architected Framework, the Sustainability pillar focuses on reducing the environmental impact of cloud workloads, mainly through efficient use of resources. The same discipline helps a workload meet current needs while preparing for future demands. For better sustainability and scalability, architect workloads to make optimal use of resources.

Some successful strategies for scalability and sustainability are auto scaling and serverless architecture. Auto scaling adds and removes resources automatically according to demand. Serverless architecture scales applications automatically without the need to provision or manage servers yourself.
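
Scaling "according to demand" is often implemented as a proportional rule: size the fleet so that the observed metric returns to a target value. The sketch below shows that rule, which is the same shape as the formula documented for the Kubernetes Horizontal Pod Autoscaler. The 50% CPU target and the capacity bounds are assumptions for illustration.

```python
import math


def desired_capacity(current, metric, target, minimum=1, maximum=10):
    """Proportional scaling: size the fleet so the metric returns to target."""
    wanted = math.ceil(current * metric / target)
    return max(minimum, min(maximum, wanted))


# Average CPU utilization (%) against an assumed 50% target.
print(desired_capacity(current=4, metric=90, target=50))  # scale out
print(desired_capacity(current=4, metric=20, target=50))  # scale in
print(desired_capacity(current=8, metric=95, target=50))  # capped at maximum
```

Clamping to a minimum and maximum keeps the service available during quiet periods and bounds cost during spikes.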

For long-term growth, use a microservices architecture in which each component works independently. Use cloud models that best match your long-term plans. Design your architecture to accommodate new technologies and stay up to date.

Managing Containerized Workloads in the Framework Review

Containerization is a revolutionary approach to application development. It enhances agility, scalability, and reliability within the cloud environment. Managing containerized workloads within the Well-Architected Framework focuses on optimizing applications with the help of container technology. Docker is a popular technology for building and running containers, and Kubernetes is a popular orchestration tool.

Containers provide an environment where an application can be placed with its dependencies to ensure consistency. Managing containerized workloads helps scale applications efficiently.

Kubernetes automates the deployment, scaling, and management of containerized applications. To get good results, follow best practices: scan images for vulnerabilities, monitor resources to ensure proper provisioning, and automate wherever possible.
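
Monitoring resources for proper provisioning usually means comparing what a container actually uses with what it has been allocated. The following is a rough sketch of such a check; the container names, usage figures, and the 30% and 90% cut-offs are all assumptions, and a real setup would read these values from a metrics system.

```python
def provisioning_report(containers, low=0.3, high=0.9):
    """Classify each container by how much of its CPU limit it actually uses."""
    report = {}
    for name, (used_cpu, cpu_limit) in containers.items():
        ratio = used_cpu / cpu_limit
        if ratio < low:
            report[name] = "over-provisioned"
        elif ratio > high:
            report[name] = "under-provisioned"
        else:
            report[name] = "ok"
    return report


# Hypothetical containers: (average CPU cores used, CPU cores limit).
usage = {
    "api": (0.3, 0.5),
    "worker": (0.1, 1.0),
    "cache": (0.95, 1.0),
}
print(provisioning_report(usage))
```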

Implementing Containerization and orchestration within a well-architected framework aligns with Performance Efficiency, Reliability, and Operational Excellence.

Serverless Applications for Operational Efficiency

Serverless application architecture within the Well-Architected Framework focuses on operational efficiency, cost-effectiveness, and scalability. In recent years serverless architecture has reshaped the software development landscape. Organizations can focus on building, testing, and deploying code rather than on the underlying infrastructure.

Serverless architecture provides on-demand processing power and is well suited to event-driven applications such as transaction processing and report generation. A strong use case for serverless applications is financial services industry workloads, where real-time processing is required all the time.
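
An event-driven function typically receives an event, does a small unit of work, and returns a result. The sketch below follows the `(event, context)` handler convention used by AWS Lambda's Python runtime; the event shape (a `transactions` list with `amount` fields) is invented for this example.

```python
import json


def handler(event, context):
    """Lambda-style entry point: summarize the transactions in one event."""
    transactions = event.get("transactions", [])
    total = sum(t["amount"] for t in transactions)
    report = {"count": len(transactions), "total": round(total, 2)}
    return {"statusCode": 200, "body": json.dumps(report)}


# Local invocation with a made-up event; a platform would supply both arguments.
sample_event = {"transactions": [{"amount": 120.50}, {"amount": 79.50}]}
print(handler(sample_event, context=None))
```

Because the function holds no state between invocations, the platform can run as many copies in parallel as the event volume requires.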

A combination of serverless applications and monitoring tools can provide cost optimization, scalability, and efficiency. Organizations can achieve operational excellence and efficiency by implementing serverless applications.

Nife Labs: Revolutionizing Cloud Solutions with a Hybrid Approach

Introducing Nife Labs, a hybrid cloud computing platform that helps businesses navigate the complexity of modern cloud computing.

Nife Labs bridges gaps in cloud architecture, aligning with the Well-Architected Framework's principles.

Nife ensures data security through encryption and efficient key management. It offers pricing options suited for varied workloads. It streamlines development, facilitating agile and reliable releases.

Elevate Your Cloud Experience with Nife Labs. Explore Now!

Conclusion

In conclusion, the Well-Architected Framework acts as a guide for organizations seeking cloud optimization. From data integrity and cost optimization to release management and newer practices like serverless computing, its pillars and practices provide a roadmap to success. For financial services industry workloads, these practices support security and scalability. By adhering to this framework, businesses forge adaptable, efficient, and secure pathways to navigate the complexities of modern cloud computing.