AI-driven Businesses | AI Edge Computing Platform

Can an AI-based edge computing platform drive businesses, or is that a myth? We explore this topic here.

Introduction

Artificial intelligence (AI) has been a hot topic for a long time. We have all heard success stories of forward-thinking corporations devising one clever way or another to use AI, or of organizations that promise to put AI first or to be truly "AI-driven." For several years now, AI has been affecting sectors all around the world. Businesses that outperform their rivals are almost certainly using AI to help guide their marketing decisions, even if this is not always visible from the outside (Davenport et al., 2019). AI can be characterized as machines or processes that show human-like intelligence, typically enabled by machine learning methods. One of its most appealing features is that it can be applied in virtually any sector. By evaluating and exploiting good-quality data, AI can solve problems and boost business efficiency regardless of the size of a company (Eitel-Porter, 2020). Companies no longer insist on being first or even second in their sectors; instead, they are approaching this transition as a natural progression.

Business Benefits of Artificial Intelligence (AI)

In the past, businesses had to depend on analysts to evaluate their data and spot patterns. Given the huge volume of data available and the limited time in a working day, it was practically impossible for them to notice every pattern or useful piece of data. Thanks to artificial intelligence, data can now be evaluated and processed in real time. As a result, businesses can speed up the optimization of business decisions and get better results in less time. These effects range from small improvements in internal corporate procedures to major improvements in traffic efficiency in large cities (Abduljabbar et al., 2019). The list of AI's further advantages is long. Here is how businesses can benefit:

A More Positive Customer Experience: One of the most significant advantages of AI is the improved customer experience it provides. AI helps businesses improve their current products by analyzing customer behavior systematically and continuously. It can also help engage customers by providing more relevant advertisements and product suggestions (Palaiogeorgou et al., 2021).

Boost Your Company's Efficiency: The capacity to automate corporate procedures is another advantage of artificial intelligence. Instead of spending labor hours on having a person carry out repetitive activities, you can use an AI-based solution to complete those tasks almost instantly. Furthermore, by using machine learning technologies, the software can suggest enhancements for both on-premise and cloud-based business processes (Daugherty, 2018). This saves time and money through increased productivity and, in many cases, more accurate work.

Boost Data Security: The fraud and threat detection capabilities that AI can provide to businesses are a major bonus. AI can surface usage patterns that help to recognize cybersecurity risks, both external and internal. For example, an AI-based security solution could regularly analyze when specific employees log into a cloud solution, which device they use, and from where they access cloud data.
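To make the login-analysis idea concrete, here is a minimal sketch in Python. It is not a description of any particular security product: the `Login` record, the function names, and the 10% threshold are all hypothetical. It also swaps machine learning for a simple frequency baseline. The code counts how often each employee has logged in at a given hour, from a given device, and from a given location, then reports which attributes of a new login are rare for that employee.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Login:
    """Hypothetical login record; field names are assumptions for this sketch."""
    user: str
    hour: int       # hour of day, 0-23
    device: str
    location: str


ATTRIBUTES = ("hour", "device", "location")


def build_profiles(history):
    """Count how often each user logged in at each hour, device, and location."""
    profiles = defaultdict(lambda: {name: Counter() for name in ATTRIBUTES})
    for event in history:
        for name in ATTRIBUTES:
            profiles[event.user][name][getattr(event, name)] += 1
    return profiles


def unusual_attributes(profiles, event, min_share=0.1):
    """Return the attributes of `event` seen in fewer than `min_share`
    of the user's past logins (assumed threshold, tune per deployment)."""
    profile = profiles.get(event.user)
    if profile is None:
        return ["user"]  # no login history at all for this user
    flagged = []
    for name in ATTRIBUTES:
        counts = profile[name]
        share = counts[getattr(event, name)] / sum(counts.values())
        if share < min_share:
            flagged.append(name)
    return flagged


# Example: ten routine logins, then one from a new device in the middle of the night.
history = [Login("alice", 9, "laptop", "Berlin") for _ in range(10)]
profiles = build_profiles(history)
print(unusual_attributes(profiles, Login("alice", 3, "phone", "Berlin")))
```

A production system would more likely learn these patterns with a trained anomaly-detection model and combine them with other signals, but the inputs are the same ones named above: time, device, and place of access.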

Speaking with AI Pioneers and Newcomers

By reaching out on a larger scale, researchers were able to identify a variety of firms at various stages of AI maturity. They split the firms into three groups: AI leaders, AI followers, and AI beginners (Brock and von Wangenheim, 2019). The AI leaders have fully adopted AI and data analysis tools in their companies, whilst the AI beginners are just getting started. The road to becoming AI-powered is paved with obstacles that can impede progress. In total, 99% of the survey respondents have encountered difficulties with AI implementation. And it appears that the longer companies work at it, the more difficult it becomes: 75% or more of those who launched their projects 4-5 years ago faced troubles. Even the AI leaders, who had invested more effort than the other two groups and began 4-5 years earlier, had over 60% of their projects encounter difficulties. When it comes to AI and advanced analytics, many companies appear to have trouble getting their employees on board. Staff were resistant to embracing new ways of working or were afraid of losing their jobs. Considering this, it should be unsurprising that the most important tactics for overcoming obstacles concern culture and traditions (Campbell et al., 2019). Overall, it is evident that the transition to AI-driven operations is a cultural one!

The Long-Term Strategic Incentive to Invest

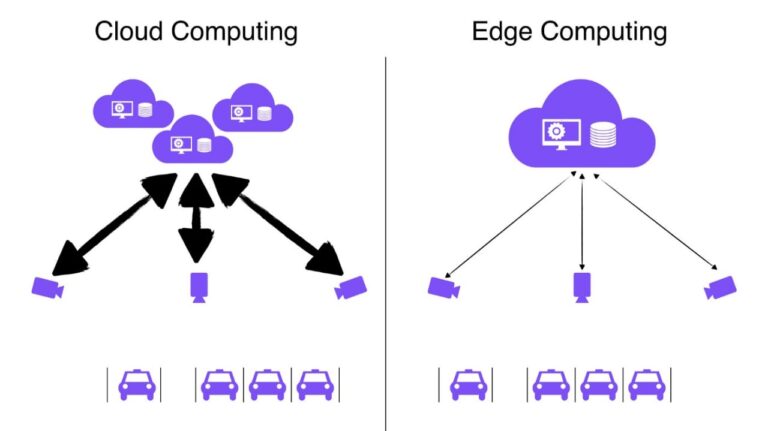

Most firms that embark on an organizational change expect to move from one stable state to a new stable state after an ideally controlled period of turbulence. When developers look at how these AI-adopting companies envision the future, however, this does not appear to be the case. To better grasp what it will be like to be entirely AI-driven, developers should concentrate on the AI leaders, since they have already progressed the most and may have a better understanding of where they are headed. It is reasonable to expect AI leaders to continue to outpace rival firms in the future (Daugherty, 2018). Maybe that is because they have a different perspective on the new reality that is taking shape. The vision that AI leaders have is not one of consistency and "doneness" in terms of process. Picture a future business in which new programs are always being developed, with the ability to increase efficiency, change how tasks are processed, influence decision-making, and offer novel ways of resolving problems. The steady state developers are looking for appears to be one of constant evolution: an organization in which AI implementation will never be finished. It is for this reason that we must start preparing for an AI edge computing platform to pave the way for the future.

References

- Abduljabbar, R., Dia, H., Liyanage, S., & Bagloee, S.A. (2019). Applications of Artificial Intelligence in Transport: An Overview. Sustainability, 11(1), p.189.

- Brock, J.K.-U., & von Wangenheim, F. (2019). Demystifying AI: What Digital Transformation Leaders Can Teach You about Realistic Artificial Intelligence. California Management Review, 61(4), pp.110–134.

- Campbell, C., Sands, S., Ferraro, C., Tsao, H.-Y. (Jody), & Mavrommatis, A. (2019). From Data to Action: How Marketers Can Leverage AI. Business Horizons.

- Daugherty, P.R. (2018). Human + Machine: Reimagining Work in the Age of AI. Harvard Business Review Press.

- Davenport, T., Guha, A., Grewal, D., & Bressgott, T. (2019). How Artificial Intelligence Will Change the Future of Marketing. Journal of the Academy of Marketing Science, 48(1), pp.24–42.

- Eitel-Porter, R. (2020). Beyond the Promise: Implementing Ethical AI. AI and Ethics.

- Palaiogeorgou, P., Gizelis, C.A., Misargopoulos, A., Nikolopoulos-Gkamatsis, F., Kefalogiannis, M., & Christonasis, A.M. (2021). AI: Opportunities and Challenges - The Optimal Exploitation of (Telecom) Corporate Data. Responsible AI and Analytics for an Ethical and Inclusive Digitized Society, pp.47–59.