Case Study: Scaling up deployment of AR Mirrors

AR mirrors, also called smart mirrors, are advanced digital mirrors that overlay virtual content on a live reflection. Augmented reality mirrors are a reality today, and they hold certain advantages amid COVID-19, such as reducing the need for physical contact.

Learn more about how to deploy and scale smart mirrors.

Introduction

AR mirrors are already used in many places because they are easy for end-users to operate. They are also used in the media and entertainment sector, where customers can use them as easily as a real mirror. AI inference runs at the edge to keep the experience responsive, and because edge computing offloads the heavy processing from the device, battery concerns are reduced.

Background

Augmented reality, artificial intelligence, virtual reality, and edge computing can make retail stores more interactive and the online experience more lifelike, elevating the customer experience and driving sales.

AR mirrors have recently emerged in retail, where they offer several advantages, and edge computing extends what they can do.

As shoppers return to stores, touch and feel are the last things they want to rely on. Smart mirrors offer an altogether new experience: shoppers can visualize different garments, see how the clothes actually fit, and explore multiple choices and sizes in a realistic augmented reflection, all without physically wearing or touching the items.

About

We use real mirrors in trial rooms to try on clothes and accessories. With the spread of the pandemic, smart mirrors have become a necessary alternative.

The mirrors make virtual objects feel tangible and handy, which maximizes their utility and builds on the customer experience. People naturally turn to an ordinary mirror to get a look and feel for what they are trying on.

Smart mirrors carry that habit into the virtual world: they let you look at jewellery, accessories, and even clothes, making the shopping experience more holistic.

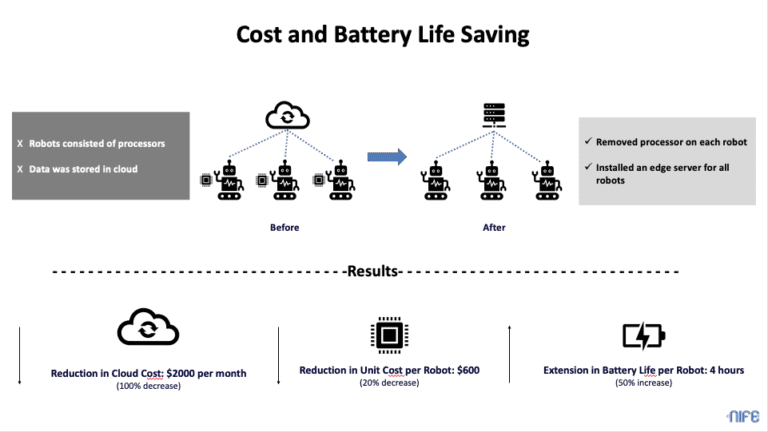

Smart mirrors use an embedded processor with AI. The local processor ensures there is no lag while the user is using the mirror, because inference runs as close to the user as possible. While this helps with inference, it increases the cost of the processor.

To drive large-scale deployment, the cost of the mirrors needs to come down. Today, AR mirrors carry a high price, which makes deploying them in retail stores or malls a challenge.

The other challenge is updating the AR application itself. Today, the system integrator needs to visit every single location to update the application.

Nife.io addresses both challenges with a minimum unit architecture: each mirror runs as a minimal unit connected to a central edge server, which lowers the overall cost and helps scale the application across smart mirrors.

Key challenges and drivers of AR Mirrors

- Localized Data Processing

- Reliability

- Uncompromised application performance

- Reduced Backhaul

Result

AR mirrors deliver a seamless user experience with AI. The mirror is a lightweight device that also keeps data local for ease of access by the end-user.

AR mirrors come with flexible features and can easily be used according to the user's preference.

Here, edge computing helps reduce hardware costs and ensures that customers and their end-users do not have to compromise on application performance.

- The local AI processing moves to the central server.

- The processor now gets connected to a camera to get the visual information and pass it on to the server.
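The two changes above can be pictured as a thin client talking to a shared server. The following is a minimal in-process sketch, not Nife's implementation: the names `Frame`, `Overlay`, `EdgeServer`, and `MirrorClient` are hypothetical, the camera read and the AI model are placeholders, and a real deployment would send the frame over the network rather than call the server directly.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """One camera capture; pixels is a stand-in for encoded image bytes."""
    mirror_id: str
    sequence: int
    pixels: bytes


@dataclass
class Overlay:
    """What the server sends back for the mirror to draw."""
    sequence: int
    label: str


class EdgeServer:
    """Hypothetical central edge server that owns all AI processing."""

    def __init__(self) -> None:
        self.frames_processed = 0

    def infer(self, frame: Frame) -> Overlay:
        # Placeholder for the real model: it only reports the payload size.
        self.frames_processed += 1
        label = f"{frame.mirror_id}: {len(frame.pixels)} bytes analysed"
        return Overlay(frame.sequence, label)


class MirrorClient:
    """Thin mirror unit: captures a frame and forwards it, no local inference."""

    def __init__(self, mirror_id: str, server: EdgeServer) -> None:
        self.mirror_id = mirror_id
        self.server = server
        self.sequence = 0

    def capture(self) -> Frame:
        # Stand-in for a camera read (assumed 64-byte dummy payload).
        self.sequence += 1
        return Frame(self.mirror_id, self.sequence, bytes(64))

    def tick(self) -> Overlay:
        # In a real deployment this call would cross the network.
        return self.server.infer(self.capture())


server = EdgeServer()
mirrors = [MirrorClient(f"mirror-{i}", server) for i in range(3)]
overlays = [m.tick() for m in mirrors]
print(overlays[0].label)        # mirror-0: 64 bytes analysed
print(server.frames_processed)  # 3
```

The point of the design is that the mirror side holds no model at all, so the same server instance can serve several mirrors and be updated in one place.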

Since the processing is moved away from the mirror itself, this also helps reduce the mirror's battery consumption.

The critical piece here is lag in operations. The end-user should not face any lag, so the central server must have enough processing power and enough isolation between workloads to run the operations.

Since the central server and its network connectivity are under the control of the application owner and the system integrator, the time spent deploying to multiple servers is sharply reduced.
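One way to reason about the lag requirement is a simple latency budget plus a capacity estimate for the central server. The sketch below is illustrative only: the stage names, the 100 ms budget, and every number are assumptions chosen for the arithmetic, not measurements from any deployment, and the helper functions are hypothetical.

```python
import math


def end_to_end_latency_ms(capture_ms, network_rtt_ms, inference_ms, render_ms):
    """Sum the stages a frame passes through before the user sees the overlay."""
    return capture_ms + network_rtt_ms + inference_ms + render_ms


def mirrors_per_worker(inference_ms, frames_per_second):
    """How many mirrors one isolated worker can serve without queueing."""
    frames_per_worker_per_second = 1000 / inference_ms
    return math.floor(frames_per_worker_per_second / frames_per_second)


def workers_needed(mirror_count, per_worker):
    """Number of isolated workers the central server must run."""
    return math.ceil(mirror_count / per_worker)


# Illustrative numbers only; none come from a measured deployment.
latency = end_to_end_latency_ms(
    capture_ms=10, network_rtt_ms=8, inference_ms=20, render_ms=12
)
budget_ms = 100  # assumed target for an interaction that feels lag-free

per_worker = mirrors_per_worker(inference_ms=20, frames_per_second=10)

print(latency, latency <= budget_ms)   # 50 True
print(per_worker)                      # 5
print(workers_needed(12, per_worker))  # 3
```

With these assumed figures, a store with 12 mirrors would need three isolated workers on the central server, and the round trip stays inside the budget as long as the network leg remains short.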

How does Nife Help with AR Mirrors?

Use Nife to offload device compute and deploy applications close to the Smart Mirrors.

- Offload local computation

- No compromise in application performance (70% improvement over cloud)

- Reduce the overall price of the smart mirrors (40% cost reduction)

- Manage and monitor all applications from a single pane of glass

- Seamlessly deploy and manage applications (5 min to deploy, 3 min to scale)