Computer Vision In Robotics: Enhancing Automation In AI

Advances in robot vision technology are widening the range of tasks that robots can take on. Most robots today specialize in specific tasks, but the ultimate goal is to create universal robots with more general skills.

Machine and computer vision algorithms let robots see, analyze, and react to changes in their environment, which may be essential to achieving this goal. This blog article examines how computer vision and robotics fit together, a relationship that still needs some clarification.

What is Robotics?

Robotics is the study, creation, and use of robots that can replicate human behavior and help humans with various activities. It takes many forms, from human-like robots to software such as robotic process automation (RPA), which imitates human interaction with applications to carry out repetitive tasks under predefined criteria.

Although the field of robotics and the exploration of robots' potential capabilities significantly expanded in the 20th century, the concept is not novel.

Robot Vision vs. Computer Vision

A common misconception is that these two terms mean the same thing. In robotics and automation, however, robot vision is a distinct capability: it lets machines, particularly robots, perceive their environment visually. Robot vision combines software, cameras, and other hardware to give a robot visual awareness.

This capability lets robots carry out complex, visually guided tasks, such as a robotic arm picking an item off a surface with the help of sensors, cameras, and vision algorithms.
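
One step in a pick operation like this is turning a detected pixel into a 3D point the arm can reach for. The following is a minimal sketch, assuming an ideal, calibrated pinhole camera and a known depth reading for the pixel. The function name `pixel_to_camera` and the intrinsic values are illustrative assumptions, not part of any particular robot's software.

```python
from typing import Tuple


def pixel_to_camera(u: float, v: float, depth_m: float,
                    fx: float, fy: float,
                    cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with known depth into camera coordinates (metres).

    Assumes an ideal pinhole camera with no lens distortion.
    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 camera (assumed values).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# A pixel at the principal point lies on the optical axis, so x and y are zero.
print(pixel_to_camera(320.0, 240.0, 0.5, FX, FY, CX, CY))
```

The result is expressed in the camera's frame. A real system would still need a camera-to-arm calibration transform before the arm could use the point.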

Computer vision, on the other hand, develops algorithms that analyze digital images or video so that computers can interpret the visual world. Its main emphasis is on pose estimation, object detection, tracking, and image classification. The use of computer vision in robotics is complex and diverse, as the following sections explore.
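
Object detection and tracking both rely on comparing bounding boxes, commonly by their intersection over union (IoU). The following is a minimal sketch, assuming axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`. The name `iou` and the box layout are illustrative choices.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes, in the range [0, 1]."""
    # Overlap rectangle; width and height clamp to zero when the boxes are disjoint.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes that overlap in a 1x1 square: intersection 1, union 7.
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

A tracker might, for example, match a detection in the current frame to the previous-frame box with the highest IoU, though real systems usually combine this with motion or appearance cues.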

Why Computer Vision in Robotics?

If you are wondering why robot vision alone is not enough, consider the following:

- Robot vision often incorporates elements of computer vision.

- Robots must process visual data in order to execute commands.

- Computer vision is used in robotics across many disciplines and industries, from medical science and autonomous navigation to nanotechnology, wherever robots are employed for daily operations.

These points show how many layers sit under the umbrella of "computer vision applications in robotics."

Common Applications

Visual feedback is crucial to how vision-guided robots work and to their broad use across industries. Robotics applications that rely on computer vision include, but are not limited to, the following:

- Space robotics

- Military robotics

- Industrial robotics

- Medical robotics

1. Space Robotics

Space robotics is a broad category that typically refers to versatile flying robots and covers areas such as:

- On-orbit servicing

- Space construction

- Space debris clean-up

- Planetary exploration and mining

A constantly shifting, unpredictable environment is one of the biggest hurdles for space robots, making tasks such as thorough inspection, sample collection, and planetary colonization difficult. Ambitious as space endeavors are, computer vision offers promising and practical answers to these problems.

2. Military Robotics

Computer vision enables robots to perform a wider range of tasks, including military operations. Projections suggest that worldwide spending on military robotics will reach \$16.5 billion by 2025, and it is clear why: adding computer vision to military robots provides significant value. Robotics has evolved from a luxury to a necessity, and vision-enabled robots support applications such as:

- Military robot path planning

- Rescue robots

- Tank-based military robots

- Mine detection and destruction

The newest generation of robots is poised to offer more sophisticated functions and a broader range of capabilities, taking inspiration from the abilities of human workers.

3. Industrial Robotics

Much of the work that needs human involvement today may be partly or entirely automated within a few years, so it is no surprise that computer vision is widely used in industrial robots. Robots can now perform a wide variety of industrial operations that go well beyond what a basic robot arm can do. This list of tasks would likely make George Charles Devol, often regarded as the father of robotics, proud:

- Processing

- Cutting and shaping

- Inspection and sorting

- Palletization and primary packaging

- Secondary packaging

- Collaborative robotics

- Warehouse order picking

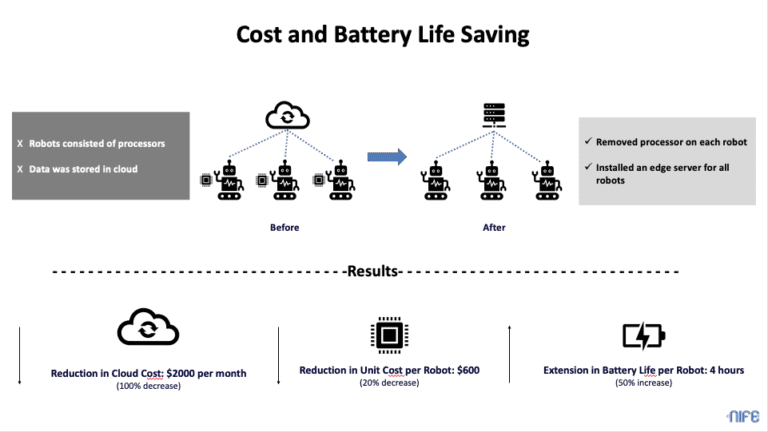

Industrial interest in vision-enabled robotics is growing because it offers several advantages. First, robots can reduce production costs in the long run.

Second, robotics and automation can deliver better quality and higher productivity.

Third, robots make production more flexible and can quickly address labor shortages. These factors increase confidence and encourage further investment in robotics and computer vision-driven automation in the industrial sector.

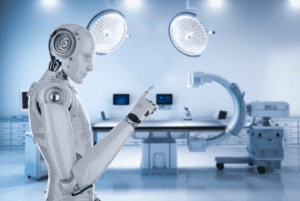

4. Medical Robotics

Computer vision analysis of 3D medical images improves diagnosis and therapy, but its uses in medicine go further. In surgery, robots are essential for pre-operative analytics, intraoperative guidance, and intraoperative verification. In particular, robots may use vision algorithms to carry out the following tasks:

- Sort surgical tools

- Suture tissue

- Plan surgeries

- Assist diagnosis

In brief, robots help ensure that the actual execution of brain, orthopedic, heart, and other surgeries matches the surgical plan and its corresponding procedures.

Computer Vision Challenges in Robotics

The next generation of robots is expected to surpass conventional robots in the skills they possess. Integrating computer vision with robotics is already a significant breakthrough and is likely to reshape the field. However, rapid progress in automation and the growing need for human-robot collaboration raise several challenges for computer vision in robotics:

- Recognizing and locating objects

- Understanding and mapping the scene

- 3D reconstruction and depth estimation

- Pose tracking and estimation

- Semantic segmentation

- Visual localization and odometry

- Collaboration between humans and robots

- Robustness and flexibility in response to changing circumstances

- Real-time performance and efficiency

- Concerns about privacy and security in computer vision applications
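
To make one item on this list concrete, depth estimation from a stereo camera pair is often introduced through the relation Z = f · B / d, where d is the disparity between the two views. The following is a minimal sketch, assuming a rectified stereo pair. The function name and the camera values are illustrative assumptions.

```python
def depth_from_disparity(disparity_px: float, focal_px: float,
                         baseline_m: float) -> float:
    """Depth in metres from stereo disparity: Z = f * B / d.

    Assumes a rectified stereo pair, focal length in pixels,
    and baseline (distance between the two cameras) in metres.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline (assumed values).
FOCAL_PX, BASELINE_M = 700.0, 0.12

near = depth_from_disparity(42.0, FOCAL_PX, BASELINE_M)  # roughly 2 m
far = depth_from_disparity(21.0, FOCAL_PX, BASELINE_M)   # half the disparity, twice the depth
print(near, far)
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for near ones. This is part of why robust depth estimation remains difficult.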

Conclusion

Robotics continues to transform many aspects of our lives and is now present in almost every field. Because human capabilities can only extend so far, automation and robots are increasingly necessary for daily tasks.

However, these advances depend on visual feedback and on integrating computer vision into robot-guided interventions. This article has given an overview of computer vision applications in the robotics industry.