Edge Computing Market Trends in Asia

Edge computing is booming all around the globe, so let us look into the latest Edge Computing Market trends in Asia.

What is Edge Computing?

The world of computing keeps moving into new models and platforms. Edge computing is one such innovation: an emerging model of interconnected networks and devices that process data close to one another, near where the data is generated. Because data does not have to travel to a distant server, edge computing delivers faster processing, lets larger volumes of data be shared among users, and makes real-time data processing possible. These benefits come from moving computation out of a centralized data centre and closer to the user. As organizations across the world learn more about edge computing, adoption is growing in all regions. In Asia, enterprise adoption is following an incremental path, with major data-consuming countries such as Singapore, China, Korea, India, and Japan exploring edge computing for its IT benefits.

The Emergence of the Asian Computing Market

The Asian computing market is driven by the very large number of internet users in countries such as China, India, Singapore, Korea, and Japan. The growth of the computing industry in smaller Asian economies such as Hong Kong, Malaysia, and Bangladesh has also created demand for global technologies like edge computing. These economies are moving towards digital currencies and digital public services that can take advantage of edge computing. Asia's emerging markets are also growing rapidly and shifting towards a technology-based industrial base. The Philippines, for example, is expected to keep growing its internet user base by 30% a year until 2025. Vietnam, another growing Asian economy, is aiming to become the fastest-growing internet economy in the next decade. This domestic demand is giving rise to local computing enterprises in Asia that are likely to pose a serious challenge to multinational IT companies.

Critical Importance of Edge Computing to Emerging Asian Markets

Businesses built on edge computing are creating efficient networks for social media, IoT, video streaming, and online gaming platforms. Edge computing also enables effective public services in smart cities and regions. The edge computing market in Asia is expected to reach $17.8 billion within the next three years, by 2025. Edge computing is the next big innovation, decentralizing computing activity across data centres and business call centres. Businesses in many industries can use edge computing to strengthen their presence in Asian markets. Nife, for example, gained a lot of traction in 2022 as one of the best application deployment platforms in Singapore. It offers one of the best edge computing platforms in Asia, with clients in Singapore and India.

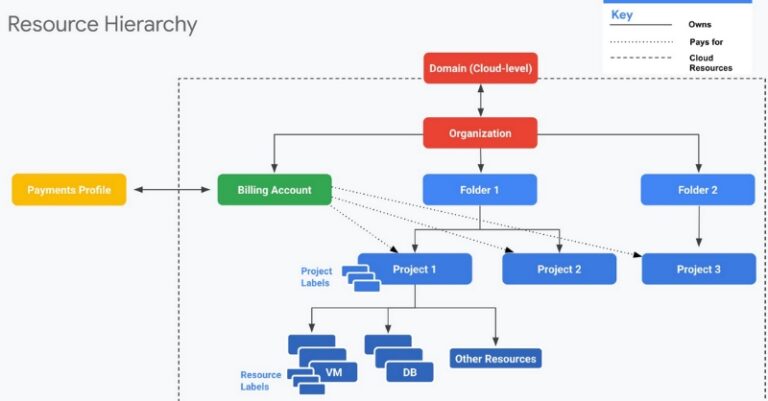

The development of multi-cloud platforms in Asia is driven by a highly skilled workforce in computer engineering. Technology-based collaboration between countries such as Singapore and India, led by businesses focused on digital tools and techniques, in digital health, smart cities, and IT infrastructure is one example of enterprise edge computing in Asia, and other Asian countries are following suit. By using edge computing platforms, Asian businesses are avoiding the infrastructure and service bottlenecks that a large consumer base would otherwise cause. Multi-cloud platforms in Singapore are a notable example of the benefits this brings to businesses. Nife, for instance, is helping enterprises build future business models that deliver stronger digital experiences with an extra layer of security. Business models built on edge computing platforms scale rapidly and globally, which can save costs when expanding into new offshore markets.

Key Trends Influencing the Edge Computing Market

According to a survey performed by Gartner in 2022, edge computing is regarded as the best application deployment platform in Singapore. Enterprise adoption of edge computing in Asia is driven mainly by the need for low-latency processing and the influx of big data. The use of IoT and artificial intelligence, along with the adoption of 5G, is fostering the development of multi-cloud platforms. The key trends shaping the development and growth of edge computing in the Singapore/Asian market are as follows:

- IoT growth: Edge computing facilitates data sharing between interconnected IoT devices, making that sharing faster and more secure. IoT devices that rely on edge computing can also optimize their actions in real time.

- Partnerships and acquisitions: Multi-cloud computing ecosystems in Asia are still developing, as service providers partner with network, cloud, and data centre providers to deliver enterprise IT and industrial applications.
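The IoT pattern described in the trends above, acting on sensor data locally and sending only a compact summary upstream, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Nife or any other platform implements it: the `Reading` type, the `process_at_edge` function, and the 80 °C alert threshold are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical alert threshold, chosen for illustration only.
ALERT_THRESHOLD_C = 80.0


@dataclass
class Reading:
    """One temperature sample from a hypothetical IoT sensor."""
    sensor_id: str
    temperature_c: float


def process_at_edge(readings: list[Reading]) -> tuple[list[str], dict]:
    """Handle a batch of readings on the edge node.

    Returns the IDs of sensors that need an immediate local response,
    plus a compact summary that could be forwarded to a central cloud
    instead of every raw reading.
    """
    # Real-time decision made locally, without a round trip to the cloud.
    alerts = [r.sensor_id for r in readings if r.temperature_c > ALERT_THRESHOLD_C]
    summary = {
        "count": len(readings),
        # mean() raises on an empty input, so guard against that case.
        "mean_temperature_c": round(mean(r.temperature_c for r in readings), 2)
        if readings
        else None,
        "alerts": alerts,
    }
    return alerts, summary


readings = [Reading("s1", 72.5), Reading("s2", 85.0), Reading("s3", 70.0)]
alerts, summary = process_at_edge(readings)
print(alerts)   # ['s2']
print(summary)  # {'count': 3, 'mean_temperature_c': 75.83, 'alerts': ['s2']}
```

The design choice here is that the latency-sensitive decision (the alert) never leaves the edge node, while the cloud receives one small summary per batch rather than every raw sample.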

Conclusion

Edge computing has emerged as the best application deployment platform in Singapore and across Asia. The progress of edge computing is changing business development in the Asian market. Its growing use in the region reflects the rising number of internet users, probably the largest in the world, and the adoption of the digital economy as a new model of industrial and economic development by many Asian economies such as Hong Kong, Malaysia, Thailand, India, and China. These factors are helping local edge computing enterprises grow and compete with the best in the world in multi-cloud services.
