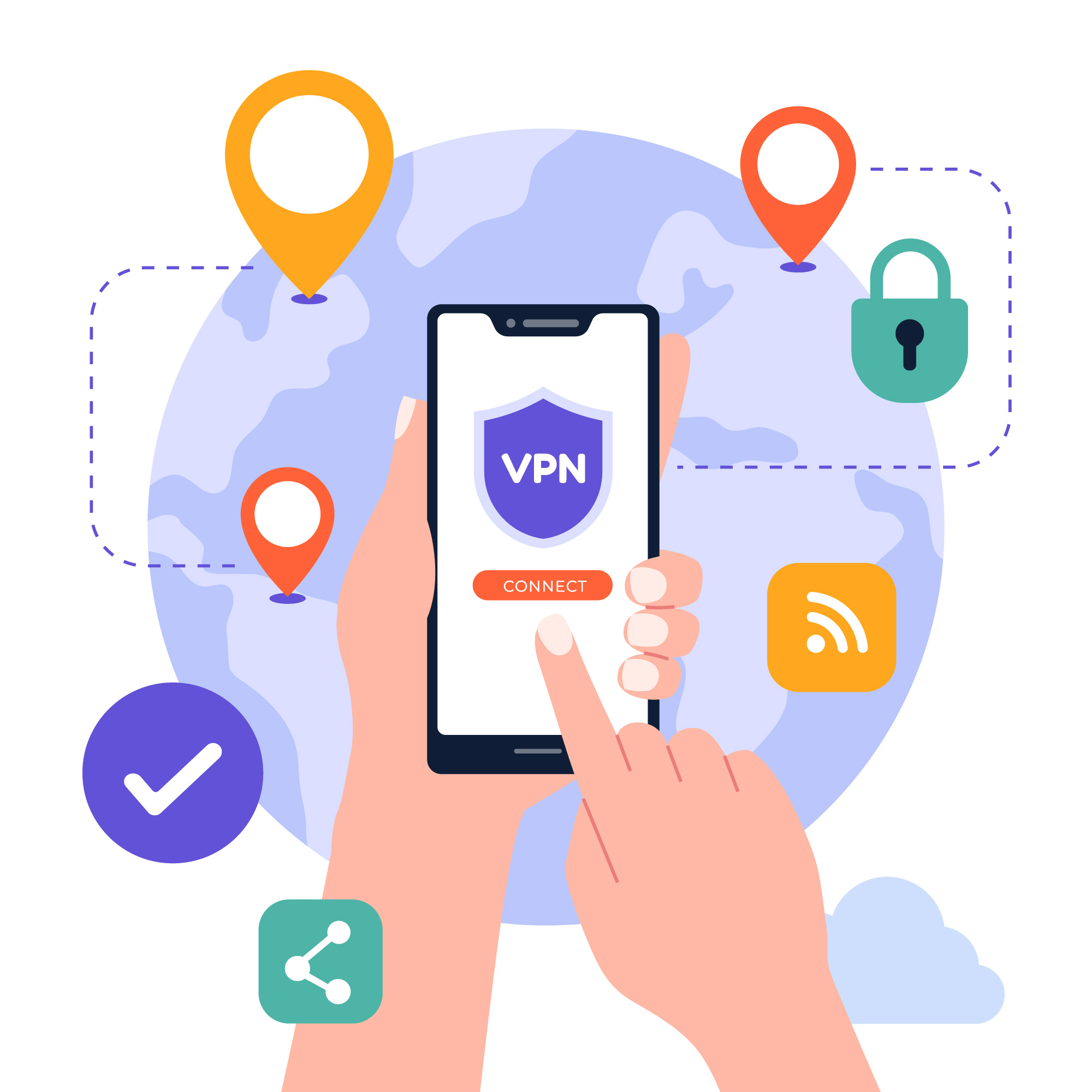

Virtual private networks (VPNs) are becoming more popular as concerns about online privacy grow. But what exactly is a VPN, how does it work, and do you actually need one? Let's take a look.

What Is a Virtual Private Network?

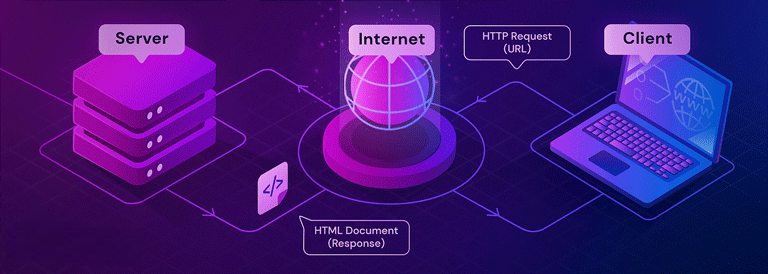

A virtual private network (VPN) is a service that creates an encrypted connection, often described as a tunnel, between your device and a server run by the VPN provider. Because your traffic travels through this tunnel, your internet service provider (ISP), the operator of the Wi-Fi network you are using, and other parties monitoring the network cannot read it or see which sites you visit. The VPN provider itself can still see that traffic, so using a VPN shifts your trust from your ISP to the provider.

Your traffic then leaves the VPN server rather than your device, so the websites you visit see the server's IP address instead of yours. Together, encryption and IP masking make it harder for outside parties to monitor your online activity or steal your personal information.

How Do VPNs Operate?

Here is a simplified overview of what a VPN does to protect you:

1. Establishing a VPN Server Connection

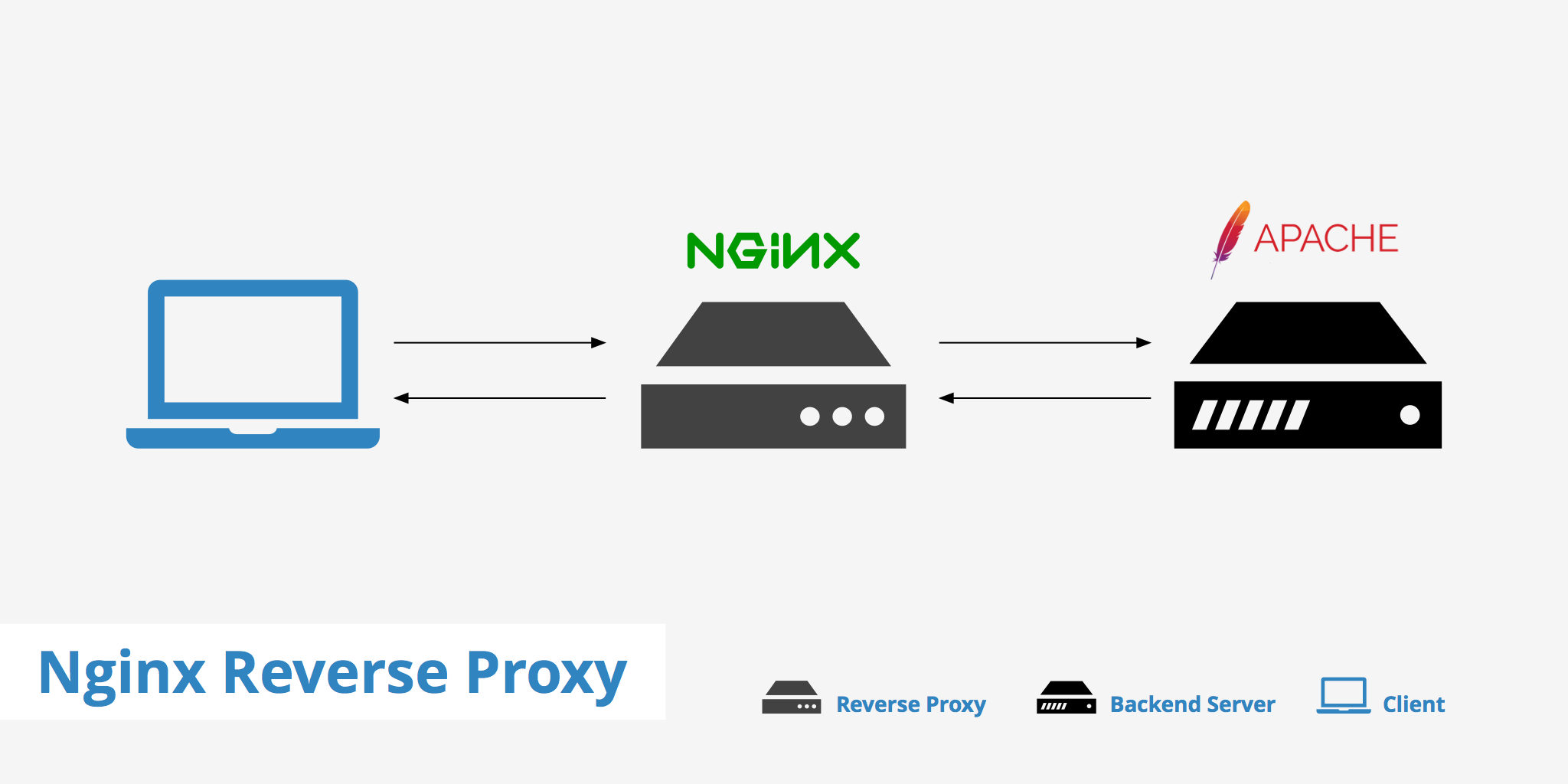

When you turn on a VPN, your device connects to a VPN server, which may be located anywhere in the world. This server acts as a go-between for your device and the websites or services you are trying to reach.

2. Encryption of Data

Once connected, the VPN encrypts all data sent from your device to the server. Even if someone intercepts it (for example, on a public Wi-Fi network), they cannot read it. This encryption covers only the stretch between your device and the VPN server; from the server onward, your traffic is protected only if the website itself uses HTTPS.
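To make "cannot be read even if intercepted" concrete, the Python sketch below seals a packet with a shared key and opens it again. It is a deliberately simplified toy built only from the standard library, not how any real VPN encrypts traffic: the SHA-256 keystream and the `seal`/`open_sealed` helpers are invented for illustration, whereas real protocols use vetted ciphers (WireGuard uses ChaCha20-Poly1305; OpenVPN and IPsec commonly use AES-GCM). The keys are random here; in a real VPN, the client and server derive them during the connection handshake.

```python
import hashlib
import hmac
import secrets

# TOY authenticated encryption for illustration only. NOT secure for real use:
# production VPNs rely on vetted ciphers such as ChaCha20-Poly1305 or AES-GCM.
NONCE_LEN = 12
TAG_LEN = 32  # length of an HMAC-SHA256 tag


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive a pseudo-random keystream by hashing key + nonce + a block counter."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out.extend(hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(out[:length])


def seal(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    """Encrypt, then append an HMAC tag over nonce + ciphertext (encrypt-then-MAC)."""
    nonce = secrets.token_bytes(NONCE_LEN)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def open_sealed(enc_key: bytes, mac_key: bytes, packet: bytes) -> bytes:
    """Verify the tag first and only then decrypt; raise ValueError if tampered."""
    nonce, ciphertext, tag = packet[:NONCE_LEN], packet[NONCE_LEN:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("packet was modified in transit")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


# Stand-ins for the keys a real client and server would agree on in the handshake.
enc_key, mac_key = secrets.token_bytes(32), secrets.token_bytes(32)
packet = seal(enc_key, mac_key, b"GET /account HTTP/1.1")
print(packet.hex())                            # random-looking bytes an eavesdropper would capture
print(open_sealed(enc_key, mac_key, packet))   # b'GET /account HTTP/1.1'
```

Checking the tag before decrypting means a packet altered on the way is rejected outright instead of being decrypted into garbage.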

3. Masking of IP Addresses

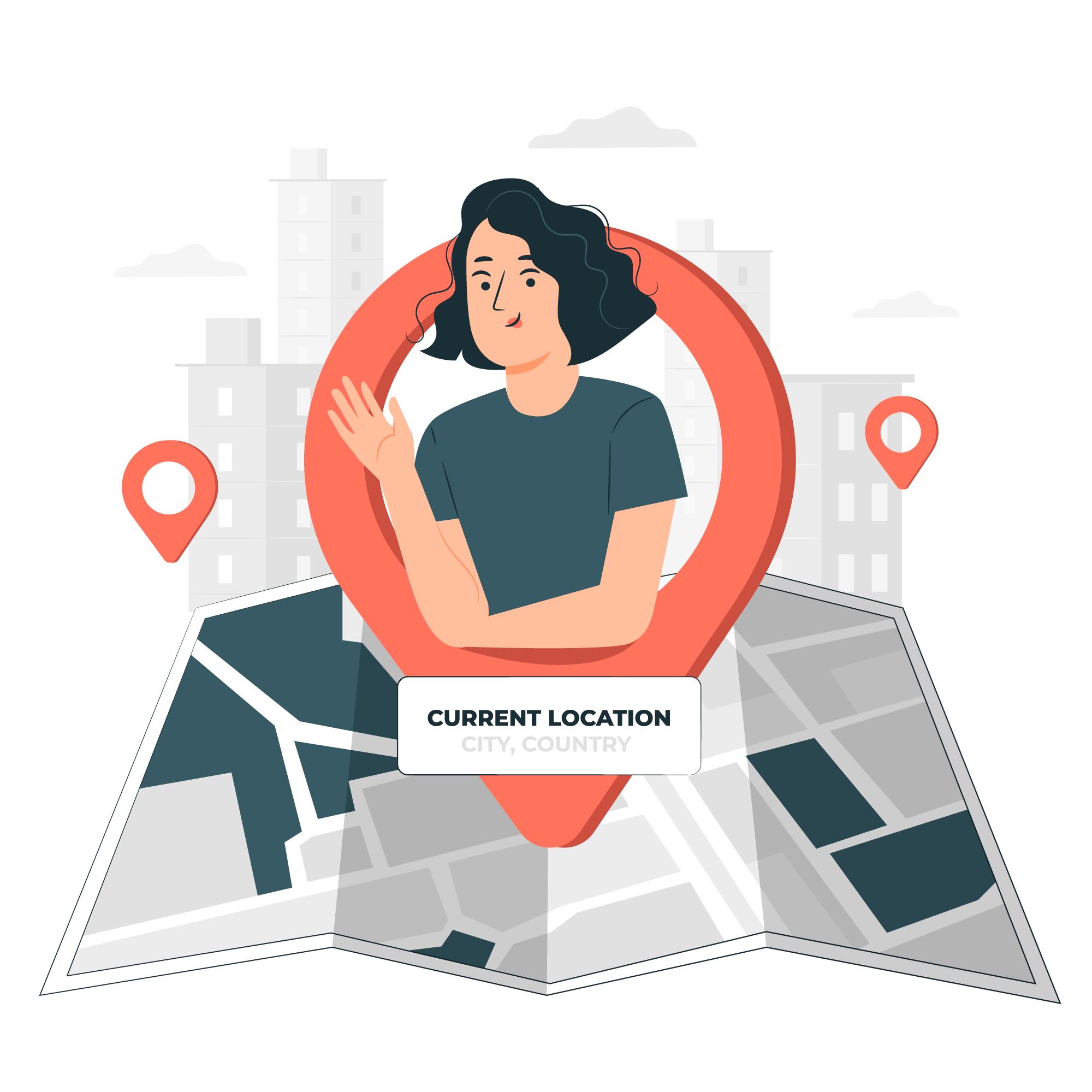

The VPN server then forwards your request to the website or service using its own IP address rather than yours, so the request appears to come from the VPN server instead of your real location.
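This forwarding step works much like network address translation (NAT), and many VPN servers implement it with the operating system's NAT. The Python sketch below is a hypothetical, heavily simplified model: `Packet` and `VpnExitServer` are invented for illustration, the public addresses come from reserved documentation ranges, and the client appears under a private tunnel address (VPNs typically assign one). It shows the server swapping in its own address on the way out and remembering which client should receive the reply.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    src_port: int
    dst: str
    dst_port: int
    payload: str


class VpnExitServer:
    """Hypothetical model of a VPN server's outbound address rewriting."""

    def __init__(self, public_ip: str):
        self.public_ip = public_ip
        self._next_port = 40000
        # public-side port -> (client tunnel address, client port)
        self._sessions: dict[int, tuple[str, int]] = {}

    def forward(self, pkt: Packet) -> Packet:
        """Replace the client's source address with the server's own."""
        port = self._next_port
        self._next_port += 1
        self._sessions[port] = (pkt.src, pkt.src_port)
        return replace(pkt, src=self.public_ip, src_port=port)

    def route_reply(self, reply: Packet) -> Packet:
        """Look up which client opened this session and address the reply to it."""
        client_ip, client_port = self._sessions[reply.dst_port]
        return replace(reply, dst=client_ip, dst_port=client_port)


server = VpnExitServer("203.0.113.10")
request = Packet("10.8.0.2", 52000, "192.0.2.80", 443, "GET /")
outbound = server.forward(request)
print(outbound.src)                    # 203.0.113.10, the only address the website sees
reply = Packet("192.0.2.80", 443, outbound.src, outbound.src_port, "200 OK")
print(server.route_reply(reply).dst)   # 10.8.0.2
```

In a real VPN the reply would then be encrypted and sent back through the tunnel; the model leaves that part out to focus on the address swap.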

4. Safe Internet Access

When the website or service replies, the response goes to the VPN server, which encrypts it and sends it back through the tunnel to your device, where it is decrypted. All of this happens in real time, so you can browse the web as usual, but with added privacy and security.


What Makes a VPN Useful?

1. Security and Privacy

Protecting your privacy is one of the main reasons to use a VPN. Because your traffic is encrypted, attackers on the same network and your ISP cannot read it or see which sites you visit, which makes your online activity much harder to track. This is especially important on public Wi-Fi networks, which are a frequent target for criminals trying to steal personal data.

2. Accessing Geo-Restricted Content

A VPN can also help you get around geo-restrictions when you want to view content that is only available in certain regions. For instance, you can use one to reach streaming services such as Netflix, Hulu, or BBC iPlayer that may be blocked in your country. By connecting to a server in another location, you can make the service believe you are in that region.
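A streaming service typically infers your region from the IP address it sees. The hypothetical lookup below uses an invented geo-IP table and catalogue (real services license large commercial databases), with addresses from reserved documentation ranges, to show why connecting through a server in another country changes the answer.

```python
import ipaddress
from typing import Optional

# Hypothetical geo-IP table; the prefixes are documentation ranges, not real allocations.
GEO_TABLE = {
    ipaddress.ip_network("198.51.100.0/24"): "DE",
    ipaddress.ip_network("203.0.113.0/24"): "US",
}

# Hypothetical catalogue: the regions where each title is licensed.
LICENSED_REGIONS = {"example-show": {"US"}}


def country_of(ip: str) -> Optional[str]:
    """Return the country the table associates with an address, if any."""
    addr = ipaddress.ip_address(ip)
    for network, country in GEO_TABLE.items():
        if addr in network:
            return country
    return None


def can_stream(title: str, client_ip: str) -> bool:
    # The service only sees the connecting IP, so that IP decides the region.
    return country_of(client_ip) in LICENSED_REGIONS.get(title, set())


print(can_stream("example-show", "198.51.100.7"))  # False: home connection in DE
print(can_stream("example-show", "203.0.113.10"))  # True: via a US VPN server
```

In practice, many streaming services keep lists of IP addresses that belong to VPN providers and block them, so this does not always work, and it may breach a service's terms of use.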

3. Avoiding Censorship

Some governments block access to particular websites or services. If you live in or travel to a country with strict internet censorship, such as China or Iran, a VPN can help you get around these restrictions and reach the open internet. Be aware that such countries often restrict or block VPNs themselves.

4. Secure Online Banking and Shopping

By encrypting your connection, a VPN adds an extra layer of security when you access financial information or shop online. It cannot guarantee that your financial information is safe, since it does not protect against phishing or malware on your device, but it does shield your connection from eavesdroppers, particularly on unprotected networks like public Wi-Fi.

5. Anonymity and Avoiding Tracking

By hiding your real IP address, a VPN helps you stay more anonymous online. Websites use your IP address to follow your browsing activity, and this information can be used to target advertisements; a VPN breaks that link. It does not stop tracking through cookies, browser fingerprinting, or accounts you are logged in to, so it adds a layer of privacy rather than complete anonymity.
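The difference between IP-based and cookie-based tracking can be shown with a small, hypothetical request log (the people, cookie IDs, and documentation-range addresses are invented). Grouping requests by IP address, as an IP-based tracker would, mixes VPN users together and loses the link to Alice's home address; grouping by cookie still reconstructs each person's history.

```python
from collections import defaultdict

# Hypothetical log a website might keep: (client IP, cookie ID, page visited).
# Alice browses first from home, then through a VPN server that Bob also uses.
requests = [
    ("198.51.100.7", "cookie-alice", "/shoes"),    # Alice, no VPN
    ("203.0.113.10", "cookie-alice", "/running"),  # Alice, via the VPN server
    ("203.0.113.10", "cookie-bob", "/cameras"),    # Bob, same VPN server
]


def profiles(log, key_index):
    """Group visited pages by one identifier (0 = IP address, 1 = cookie ID)."""
    grouped = defaultdict(list)
    for entry in log:
        grouped[entry[key_index]].append(entry[2])
    return dict(grouped)


print(profiles(requests, 0))
# {'198.51.100.7': ['/shoes'], '203.0.113.10': ['/running', '/cameras']}
print(profiles(requests, 1))
# {'cookie-alice': ['/shoes', '/running'], 'cookie-bob': ['/cameras']}
```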

When Is a VPN Actually Necessary?

Although VPNs have many advantages, not every internet user needs one. A VPN is most helpful in the following situations:

1. When Using Public Wi-Fi

Public Wi-Fi networks, such as those in coffee shops, hotels, and airports, are often unsecured, which makes it easy for cybercriminals to intercept your data and steal your personal information. A VPN protects you by encrypting your internet activity on these networks.

2. When Handling Sensitive Information

If you regularly work with sensitive data, such as banking details, medical records, or work documents, a VPN adds an extra layer of protection when you access or send this data online.

3. When Accessing Region-Locked Content

If you are trying to watch a Netflix series, browse a YouTube library, or use a service that is not available in your area, a VPN can help you get around these geographic restrictions by connecting to a server in a country where the content is available.

4. When You Want to Remain Anonymous Online

If you value anonymity and don't want your IP address or browsing habits monitored, a VPN is a useful tool for hiding your identity and reducing tracking.

When a VPN May Not Be Necessary

1. Everyday Browsing on Secure Networks

If you are simply browsing at home on a secure, trusted Wi-Fi network and not accessing sensitive information, you may not need a VPN. Most modern websites use HTTPS, which already encrypts the content of your traffic in transit. HTTPS does not, however, hide which sites you visit from your ISP, since server addresses and domain names remain visible.
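The difference between HTTPS alone and HTTPS plus a VPN comes down to what an on-path observer such as your ISP can read. The Python sketch below is a rough, hypothetical model (`Visit` and `visible_to_isp` are invented names, and the addresses and `.example` domain are reserved for documentation). It assumes unencrypted DNS and no Encrypted Client Hello when there is no VPN, and that the VPN also carries DNS through the tunnel; traffic size and timing are visible in every case and are not modelled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Visit:
    domain: str
    server_ip: str
    path: str
    body: str


def visible_to_isp(visit: Visit, https: bool, vpn_server_ip: Optional[str] = None) -> dict:
    """Rough model of what an on-path observer such as your ISP can read."""
    if vpn_server_ip is not None:
        # Everything (assumed to include DNS) is inside the tunnel to the VPN server.
        return {"destination_ip": vpn_server_ip}
    # Without a VPN, the server address and domain name are visible even with HTTPS.
    seen = {"destination_ip": visit.server_ip, "domain": visit.domain}
    if not https:
        # Plain HTTP: the page path and contents travel unencrypted.
        seen.update(path=visit.path, body=visit.body)
    return seen


visit = Visit("bank.example", "192.0.2.80", "/login", "user=alice")
print(visible_to_isp(visit, https=False))
# {'destination_ip': '192.0.2.80', 'domain': 'bank.example', 'path': '/login', 'body': 'user=alice'}
print(visible_to_isp(visit, https=True))
# {'destination_ip': '192.0.2.80', 'domain': 'bank.example'}
print(visible_to_isp(visit, https=True, vpn_server_ip="203.0.113.10"))
# {'destination_ip': '203.0.113.10'}
```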

2. Websites That Don't Involve Personal Data

If you are only reading news sites, blogs, or forums that don't require you to log in or share personal information, a VPN may not add much, although it can still offer some extra privacy.

3. Performance Issues

A VPN can slow down your internet connection, because your traffic takes a detour through the VPN server and every packet must be encrypted and decrypted. If you are already dealing with slow or unstable internet, it may be better to go without a VPN unless security is a top priority.
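A back-of-the-envelope calculation shows where the slowdown comes from. The sketch below assumes signals in optical fibre cover roughly 200 km per millisecond and uses 60 bytes of per-packet overhead, which matches WireGuard over IPv4; the distances are hypothetical, and the model ignores encryption time on your device and load on a busy VPN server, both of which add further delay.

```python
# Rough model of two VPN costs. Assumptions (not measurements):
# - signals in optical fibre cover roughly 200 km per millisecond, and the
#   distances used below are hypothetical (real routes exceed map distance);
# - 60 bytes of per-packet overhead, matching WireGuard over IPv4
#   (20-byte IP header + 8-byte UDP header + 32 bytes of WireGuard framing).
FIBRE_KM_PER_MS = 200
WIREGUARD_IPV4_OVERHEAD = 60


def round_trip_ms(*one_way_hops_km: float) -> float:
    """Propagation-only round-trip time across a chain of one-way hops."""
    return 2 * sum(one_way_hops_km) / FIBRE_KM_PER_MS


def payload_share(mtu: int, tunnel_overhead: int) -> float:
    """Share of each full-size packet left for your own tunnelled traffic."""
    return (mtu - tunnel_overhead) / mtu


print(f"{round_trip_ms(300):.1f} ms")         # 3.0 ms: direct to a site 300 km away
print(f"{round_trip_ms(1500, 1400):.1f} ms")  # 29.0 ms: detour via a distant VPN server
print(f"{payload_share(1500, WIREGUARD_IPV4_OVERHEAD):.0%}")  # 96%
```

Under these assumptions, the detour to a far-away server costs far more than the encryption overhead, which is why choosing a nearby VPN server usually helps.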

Conclusion

In short, a VPN is a powerful tool that can protect your privacy, secure your data, and give you more control over what you do online. Whether you're working with sensitive data, accessing restricted content, or simply browsing the web more securely, a VPN offers a significant layer of protection. However, it's important to weigh your needs: like any tool, a VPN is most effective when used for the right reasons.

For more insights on secure and scalable cloud solutions, visit Nife.io.

If you're ready to take your online privacy seriously, a VPN might be just what you need. So go ahead, protect yourself, and browse the web with far less exposure to prying eyes.